Addressing the Full Stack of AI Concerns: Responsible AI, Trustworthy AI, Secure AI and Safe AI Explained


Introduction

We have known for a long time that change is unavoidable. It is, as Heraclitus said more than 2,500 years ago, the only constant. And, especially since 1965, when Gordon Moore made the observation that became known as Moore’s law, there has been a growing recognition that the pace of change is accelerating. But what people tend to talk about far less is resistance to change, a phenomenon as natural as change itself. It’s written into human nature, even into the fabric of the physical world: Newton’s third law of motion states that for every action there is an equal and opposite reaction. People don’t like change, and when it’s forced upon them, they dig in their heels.

Does that explain the many concerns that experts across the world have about the now-rampant development of AI? Are these people, as many technophiles suggest, overreacting, merely resisting the unavoidable tide of change because they are afraid of the unknown? As I map out in my personal statement on AI risk, the simple answer to that question is ‘No’. There are many reasons to be concerned about unrestricted AI development – just ask those at the epicentre of the technology’s boom. Last year’s shock ouster of OpenAI CEO, Sam Altman, by the OpenAI Board was partly motivated by the belief that Altman was shortcutting due diligence and not doing enough to ensure OpenAI’s technologies were being developed responsibly. Altman reclaimed the CEO role, of course, but not without controversy and serious questions cast over the authenticity of the company’s commitment to safe and responsible development of artificial intelligence.

Helen Toner and Tasha McCauley, former members of OpenAI’s board, frame this as a cautionary tale. They say the profit incentive has gradually distorted OpenAI’s interpretation of its original mission of creating AI that would benefit “all of humanity”. They also challenge the view that private companies should be allowed to manage their own AI development without external regulation. Their basic suggestion, that the boundaries of AI “safety and security” are being jeopardized by market forces and human greed, is not a new concern. But this is OpenAI we’re talking about: a company whose primary consumer product, ChatGPT, will soon be integrated into Apple’s operating systems, which run on more than 2 billion active devices across the globe. If we have doubts about OpenAI’s risk management, what can we possibly expect from all the lower-profile developers seeking to leapfrog the competition with the latest shiny toy, not to mention bad actors who have little regard for user well-being?

It’s not enough for leading academics and engineers to voice their concerns about this; we need to have more conversations in all corners and at all levels of society. But for those conversations to lead to healthy outcomes, we need to be sure we’re talking about the same phenomena, and right now I don’t think we are. In the US, the 2023 Executive Order on this subject calls for “safe, secure, and trustworthy” AI, as well as an understanding of “explainable” AI. By contrast, the Department of State’s first-ever Enterprise AI Strategy, written in response to the presidential order, centers on using AI “responsibly and securely”. In the National Artificial Intelligence Research and Development Strategic Plan, Strategy 4 is to “Ensure the safety and security of AI systems” by designing AI systems that are “trustworthy, reliable, dependable, and safe”. The European AI Strategy mentions Artificial Intelligence that is “explainable”, “responsible” and “trustworthy”.

Safe AI and Secure AI are not the same thing. Nor are Responsible, Trustworthy, and Explainable AI. This becomes confusing because these terms are used inconsistently, sometimes interchangeably, in public policy and private enterprise. That is understandable, as there are no agreed definitions of these terms and no universal regulations defining their meaning and remit. Furthermore, what you regard as trustworthy AI, for example, may differ slightly depending on whether you are a designer, deployer or user of the technology. Yet understanding the differences and connections between these types of AI is crucial if we are to be targeted, efficient and effective in our AI risk management.

What follows is an attempt to bring clarity to these critical aspects of AI risk, and the approaches that leverage this understanding to deliver systems that optimize trust, safety and security.

Trustworthy vs Responsible AI

Despite the enormous hype about AI and the many promises of how it will improve our lives, the average person remains uncertain. According to Pew Research Center surveys, a majority of Americans say they feel more concerned than excited about the increased use of artificial intelligence, and that share is increasing year-on-year. In many ways, that skepticism is healthy, but if we are ever to unlock AI’s full potential, we will need to be able to assure people that AI can be trusted to act in the best interests of humanity, and that those developing and operating AI systems are doing so responsibly.

These two concepts—trustworthiness and responsibility—are inextricably linked and ultimately share the same goal: a harmonious integration of AI in human society. But responsible AI and trustworthy AI also differ in their meaning and use, focusing on different aspects of AI system development and deployment.

Trustworthy AI is concerned with the reliability, security, and robustness of AI systems. It focuses on building confidence in AI by ensuring these systems are dependable, free from biases, and protected against vulnerabilities. Trustworthy AI aims to create AI systems that users can rely on, knowing they will perform consistently, predictably and within acceptable boundaries. In its practical application, trustworthy AI incorporates ethics, but emphasizes technical considerations.

By contrast, responsible AI centers on the ethical creation and application of AI technologies, ensuring alignment with human values, societal norms, and legal requirements. It emphasizes principles such as fairness, transparency, and accountability, aiming to prevent harm and promote social good. In its practical application, responsible AI incorporates technical considerations, but emphasizes ethics.

Trustworthy AI

Trustworthy AI is crucial to mitigating risks associated with AI technologies, such as discrimination, privacy breaches, and lack of control over automated decisions, which can have significant societal impacts. As such, it involves creating AI systems that are safe, secure, fair, and reliable, and that adhere to ethical standards and legal requirements. According to the Ethics Guidelines for Trustworthy AI, published by the European Commission’s High-Level Expert Group on AI, this means AI that is:

- lawful – respecting all applicable laws and regulations

- ethical – respecting ethical principles and values

- robust – both from a technical perspective while taking into account its social environment

Within these guidelines, we can identify further principles key to trustworthiness, all of which are closely related.

Attributes of trustworthy AI

1. Transparent, interpretable and explainable

AI systems should be transparent in their operations and decision-making processes. This means that both the mechanisms of AI models and the data they use should be accessible and understandable to users and stakeholders. Transparency is crucial for building trust and enabling effective governance and is closely connected with the concepts of explainability and interpretability.

Interpretable AI refers to the ability of an AI system to provide outputs that humans can understand in the context of the system’s functional purpose. For example, in interpretable models like linear regression, decision trees, and simple rule-based systems, the structure and operations of the AI are straightforward enough for users to grasp how decisions are reached without needing additional explanations.

However, as AI models have grown in complexity, many have become “black boxes” where the inner workings and decision logic are opaque, even to their developers. Neural networks, for example, can have millions or even billions of parameters, making it difficult to interpret how particular results were achieved. Post-hoc techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) can be used to approximate how much each input feature contributes to a given output, but full transparency is rarely achievable.
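SHAP builds on Shapley values from cooperative game theory: each feature’s attribution is its average marginal contribution to the prediction across all possible coalitions of features. The sketch below computes exact Shapley values by brute force for a hypothetical three-feature toy model. It assumes that features “absent” from a coalition are replaced by a baseline value, which is one common convention among several. Libraries such as SHAP approximate this quantity for real models, where enumerating every coalition is infeasible.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley attributions for a single input x.

    Features outside a coalition are set to their baseline value (an assumed
    convention). All 2^n coalitions are enumerated, so this only works for
    a handful of features.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for coalition in combinations(others, size):
                # Shapley weight: |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                with_i = [x[j] if (j in coalition or j == i) else baseline[j] for j in range(n)]
                without_i = [x[j] if j in coalition else baseline[j] for j in range(n)]
                phi[i] += weight * (predict(with_i) - predict(without_i))
    return phi


# Hypothetical toy scoring model with an interaction between income and years.
def toy_model(features):
    income, debt, years = features
    return 2.0 * income - 1.5 * debt + 0.5 * income * years


x = [3.0, 1.0, 2.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi)
# Efficiency property: the attributions sum to f(x) - f(baseline).
print(sum(phi), toy_model(x) - toy_model(baseline))
```

The interaction term is split equally between income and years, which is the kind of “fair division” that makes Shapley-based explanations attractive. It also explains why they only approximate, rather than reveal, what the model is doing internally.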

This opacity raises significant concerns about trust and accountability. Explainable AI aims to address these concerns by making the operations of AI systems comprehensible to humans, enabling users to understand how inputs produce specific results. This clarity is crucial for building user confidence, ensuring fairness, and potentially meeting regulatory standards. By demystifying complex decision-making processes, explainable AI helps identify and mitigate biases and errors, fostering both responsible use and robust development of AI systems. This is especially crucial in sectors where AI decisions have significant social implications, such as healthcare, finance, and criminal justice.

Regulations such as the EU’s General Data Protection Regulation (GDPR) can be interpreted as requiring explainability, particularly through the so-called “right to explanation”, under which individuals can ask for explanations of algorithmic decisions that affect them. However, there is often a trade-off between performance and explainability: more complex models that offer higher accuracy are often less explainable, which makes it tricky to set the appropriate level of explainability for a given system.

2. Accountable

Developers and operators of AI systems should be accountable for their functioning and impact. This involves having clear policies and practices in place to address any issues or damages caused by AI technologies. Accountability also requires mechanisms to track and report the behavior of AI systems.

Of course, accountability ultimately depends on ethical perspective, and without external parameters like regulation, people and businesses tend to hold themselves accountable to their own ethical standards which, as we all know, vary greatly from one player to another. At a national level, 47 countries have signed up to the OECD AI Principles, which promote trustworthy AI, as a voluntary show of accountability. At an organizational level, companies like DeepMind, IBM, Microsoft, and OpenAI have gone to extra lengths to establish oversight committees and engage in public discourse.

These measures are no guarantee of accountability, however. IBM and Microsoft, along with Amazon, have faced public pressure in the past for deploying facial recognition software without adequate safeguards. Critics argued that the companies had not been sufficiently transparent about the potential inaccuracies and prejudices of their technologies, especially regarding racial and gender biases. This lack of transparency and accountability led to calls for stricter regulation and oversight of their AI technologies, and eventually to the suspension or adaptation of the tools.

Going further still, Clearview AI has faced widespread criticism, legal challenges and multiple penalties for its facial recognition technology, which scrapes images from social media without users’ consent.

Such failures by AI developers and operators to self-regulate and to hold themselves to the highest ethical standards, rather than the bare minimum needed to pacify lawmakers and users, corrode AI trustworthiness. This is the central concern of former OpenAI board members Toner and McCauley, and of others like them who fear we cannot rely on private companies to prioritize accountability over profit.

3. Reliable, resilient, safe and secure

To be trustworthy, AI systems must be technically robust and reliable, functioning dependably under a wide range of conditions, not only with the data they were trained on but also with new, unfamiliar data.

Reliability also requires being resilient to attacks and errors, and having safeguards to prevent and mitigate potential harms or malfunctions. According to the US National Institute of Standards and Technology (NIST), AI systems and the ecosystems of which they form a part can be said to be resilient “if they can withstand unexpected adverse events or unexpected changes in their environment or use – or if they can maintain their functions and structure in the face of internal and external change and degrade safely and gracefully when this is necessary.”

Building resilient systems, which are able to recover quickly after adverse events, is part of building secure systems, which must also be able to repel adverse events or respond to them quickly enough to prevent harm. If you are familiar with my work, you’ll know that I’m especially passionate about this point: we no longer live in a world in which our digital and physical domains exist separately. Our world is cyber-physical, and any cyber threats amplified by AI translate into very real physical threats to human well-being.

This brings us to the notion of safe AI which, along with secure AI, we will explore in more detail later in this article.

4. Fair and non-discriminatory

Ensuring that AI systems do not perpetuate bias or discrimination is essential. AI applications should be regularly tested and audited to identify and eliminate biases in decision-making processes, promoting fairness across all user interactions.
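One simple, widely used audit check compares selection rates (the share of positive decisions) across groups. The sketch below computes per-group selection rates and the ratio of the lowest to the highest rate for a hypothetical shortlisting dataset. The 0.8 threshold reflects the commonly cited US “four-fifths” rule of thumb and is used here only as an assumed trigger for human review. A real audit would examine several fairness metrics, because they can conflict with one another.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Share of positive decisions (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


# Hypothetical audit sample: 1 = shortlisted, 0 = rejected.
decisions = [1, 1, 1, 0, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
ratio = disparate_impact_ratio(decisions, groups)
print(rates, round(ratio, 2))
if ratio < 0.8:  # assumed review threshold ("four-fifths" rule of thumb)
    print("Flag for review: selection rates differ substantially between groups")
```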

This is one of the AI concerns that has received the most press over the last few years, with high-profile cases such as Amazon’s gender-biased recruitment tool, ChatGPT’s training data possibly perpetuating societal biases in higher education, or gender biases in Google’s BERT. Research by the Berkeley Haas Center for Equity, Gender, and Leadership suggests that 44% of the AI systems it analyzed were embedded with gender bias, with at least one quarter displaying both gender and race bias.

Socially, bias typically implies prejudice of some kind, but in AI models and algorithms, which are agnostic to the content they are processing, bias is less personal and more technical. Technical biases in AI relate to the mathematical and algorithmic aspects of how AI systems function. In the context of neural networks, for example, bias is a term used to describe a component of the model architecture. Each neuron in a neural network has associated weights and a bias term. The bias allows the activation of the neuron to be shifted, enabling the model to fit the data more flexibly and accurately. In this sense, a bias could more accurately be thought of as a decision-making preference or orientation. Problems arise when those technical “biases” lead to systematic errors that result in unfair or prejudiced outcomes.
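To make the architectural meaning concrete, the minimal sketch below implements a single artificial neuron with a sigmoid activation, computed as sigmoid(w · x + b). The inputs and weights are arbitrary illustrative values. Only the bias term b changes between runs, and it shifts the neuron’s output up or down regardless of the input. This is a learned parameter, not a social prejudice.

```python
import math


def neuron(inputs, weights, bias):
    """A single artificial neuron: sigmoid(w · x + b)."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))


inputs = [0.5, 0.2]    # illustrative values
weights = [1.0, -2.0]  # illustrative values

# Same inputs and weights; only the bias term changes.
for bias in (-2.0, 0.0, 2.0):
    print(f"bias={bias:+.1f} -> activation={neuron(inputs, weights, bias):.3f}")
```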

Having said that, most of the identified issues with prejudicial AI systems originate from some form of data bias: either historical (when the training data reflects existing prejudices or inequalities) or an issue with data sampling (when the training data is not representative of a broad enough population).
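Sampling bias can sometimes be caught before training by comparing how often each group appears in the training data with its share of the population the model is meant to serve. The sketch below does exactly that. The group labels and reference shares are hypothetical, and in practice the reference figures would come from census or domain data. Note that this kind of check detects only under- or over-representation. It cannot detect historical bias, where a dataset is perfectly representative of an unfair past.

```python
from collections import Counter


def representation_gaps(training_groups, reference_shares):
    """Training-data share minus reference-population share, per group.

    Large negative values suggest the group is under-represented.
    """
    counts = Counter(training_groups)
    total = sum(counts.values())
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical figures for illustration only.
training_groups = ["urban"] * 850 + ["rural"] * 150
reference_shares = {"urban": 0.6, "rural": 0.4}

gaps = representation_gaps(training_groups, reference_shares)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```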

Biases can also be introduced in the design phase of the algorithm, such as the selection of particular features over others, or the formulation of objectives. Furthermore, if the model optimization process prioritizes certain outcomes over others, it might lead to biased results. For instance, prioritizing accuracy over fairness can reinforce existing biases.

Ultimately, any kind of bias could lead to a reduction in fairness and, consequently, AI trustworthiness.

5. Committed to privacy and data governance

Protecting the privacy of users and handling data responsibly are key aspects of trustworthy AI. This means implementing strong data protection measures, managing data transparently, and complying with applicable privacy laws and regulations.

One of the sanctions imposed on Clearview AI, mentioned above, was a EUR 20 million fine for, principally, contravening the EU’s GDPR by using AI to unlawfully process personal data. AI systems often rely on vast amounts of data, much of which can be personal or sensitive. Effective privacy measures ensure that individuals’ personal information is protected from unauthorized access and misuse. Without robust privacy protections, there is a risk of data breaches, identity theft, and other forms of cybercrime, which can undermine public trust in AI technologies. As such, privacy-enhancing technologies (PETs) like differential privacy, homomorphic encryption, federated learning, and secure multi-party computation become crucial to maintaining user trust in AI systems.

Trustworthy AI Summary

For AI to be consistently trusted by humans it needs to integrate the attributes described above in a holistic approach that considers the potential trade-offs of each characteristic and the contextual nuances surrounding the AI system. This is not easy. Ensuring AI systems are understandable and their decisions are explainable is technically challenging, especially with complex models like deep learning. Also, compromises often need to be made between competing requirements, such as between performance and transparency, or between fairness and accuracy. Finally, with different ethical, cultural, and legal standards worldwide, creating universally accepted guidelines for trustworthy AI has proven difficult.

Responsible AI

Trustworthy AI and responsible AI are closely connected and share many similarities, but with some subtle differences in focus. As mentioned, trustworthy AI ensures that AI systems perform as expected, can be relied upon for critical decisions, and do not behave unpredictably or unfairly.

Responsible AI, on the other hand, is more encompassing and includes a broader ethical perspective. It emphasizes the societal and moral implications of AI technologies, ensuring that they are developed and used in ways that maximize benefits and minimize, if not eliminate, harm. As such, responsible AI rests on the principles and practices that should guide the entire lifecycle of AI development and deployment to ensure that AI systems are used ethically and for the greater good.

The fundamentals of responsible AI

When examining the principles that govern responsible AI, we find a number of overlaps with trustworthy AI, as well as broader considerations that go beyond the establishment of human trust.

1. Ethical Purpose

Responsible AI explicitly focuses on aligning AI applications with societal values and ethical considerations. This includes ensuring that AI is used for purposes that are socially beneficial and do not exacerbate existing inequalities or create new ethical dilemmas.

2. Fairness and Non-Discrimination

While fairness ensures that AI systems do not perpetuate bias and discrimination (a key aspect of trustworthiness), in the context of responsible AI, it also involves proactive measures to address systemic inequalities and promote social justice. For example, responsible AI might involve actively using AI to identify and mitigate inequalities in access to healthcare or education. It also considers questions of access, recognizing the inequalities of opportunity that result from unequal access to AI and similar technologies based on gender, race, age, income, geography, class, disability or education.

3. Accountability

Trustworthy AI requires clear accountability mechanisms to build user confidence. Responsible AI extends this by advocating for legal and ethical frameworks that hold developers and organizations accountable for the broader societal impacts of their AI systems. This might include regulations that ensure AI applications do not harm vulnerable populations or contribute to negative social outcomes.

This question is at the crux of many of the current disagreements around AI development, with many private companies arguing that they should be trusted to manage development in a responsible way, and many other stakeholders arguing for more independent oversight and control.

Ultimately, regulation and corporate freedom are two sides of an irresolvable polarity: we need both to ensure that innovation can unlock the healthy potential of AI, while protecting society from the dangers of irresponsible advancement in the pursuit of commercial growth. It’s an ‘and’, not an ‘or’. That means companies need to be responsible for themselves (management, employees and shareholders) AND responsible to private individuals, societies and the institutions that govern them. Where AI developers and owners draw those lines of accountability, prioritizing who they are most accountable to, may well be the deciding factor in whether history remembers AI as the technology that spurred humanity on to greatness, or accelerated its demise.

4. Privacy and Data Protection

Protecting privacy is essential for trust, as users need to feel secure in sharing their data. Responsible AI encompasses this by ensuring that data practices not only comply with legal standards but also respect individuals’ autonomy and consent, addressing potential ethical dilemmas in data usage and AI deployment. It asks AI players to go beyond what the letter of the law requires, to have the best interests of their users at heart.

5. Safety and Robustness

Trustworthy AI must be robust and safe to be reliable. In responsible AI, safety and robustness also involve considering the long-term impacts of AI systems, ensuring they do not cause unintended harm to society or the environment. This might include evaluating the ecological footprint of AI technologies and striving for sustainable development practices that remain safe for humans and the ecosystems that support them.

6. Human-Centric Design

Human-centric design ensures that AI systems enhance human capabilities and are aligned with human values, a principle that is central to responsible AI. It advocates for AI systems that prioritize human welfare, enhance human dignity, and respect human rights. As the AI field evolves, this orientation will likely evolve too, leaning more towards planet-centric design that prioritizes the welfare, dignity and rights of all living creatures and the environment of which they form a part.

7. Inclusivity and Accessibility

As alluded to above, responsible AI emphasizes the need for inclusivity, ensuring that AI technologies are accessible to diverse populations, including marginalized and underrepresented groups. This principle ensures that the benefits of AI are equitably distributed and that no group is left behind, fostering a sense of social responsibility.

Responsible AI Summary

While the goal of responsible AI is clear, its implementation faces challenges, such as defining ethical standards across different cultures and contexts, managing the trade-offs between AI performance and ethical constraints, and ensuring comprehensive regulatory frameworks that can keep up with the pace of AI innovation. Moving forward, the responsible AI agenda will rely on the development of more sophisticated ethical frameworks; global cooperation on standards and regulatory compliance; continuous monitoring and auditing; and sustained stakeholder engagement.

Secure AI, Safe AI and the wicked problem of AI alignment

While Trustworthy and Responsible AI set the technical and ethical frameworks and goals for reducing AI risk, it is subsets of these orientations, safe and secure AI, that provide the necessary technical safeguards and operational practices to realize these goals effectively.

AI safety and AI security, though closely linked, address distinct concerns within the framework of AI development and operation, focusing on ensuring that AI systems are both trustworthy and ethically sound. Both fields share a fundamental objective: risk mitigation. AI safety concentrates on averting harm and ensuring that AI systems do not negatively impact humans or their environment. AI security is concerned with protecting systems against external and internal threats, such as cyber attacks, unauthorized data access, and breaches.

Trustworthiness and reliability are central to both disciplines. AI safety enhances system robustness and resilience, while AI security ensures data integrity and system availability to prevent and withstand adversarial attacks. Transparency and accountability are critical too; safety efforts make AI systems explainable and accountable, whereas security measures demand clear communication of vulnerabilities and defenses to ensure robust monitoring and incident response.

Integrating AI safety and AI security requires a multidisciplinary approach, involving collaboration across various stakeholders, including technologists, policymakers, and ethicists. Together, these efforts ensure the development of AI systems that are not only effective and powerful but also aligned with societal values and secure from potential threats.

Secure AI

Secure AI primarily deals with the protection of AI systems against unauthorized access, data breaches, and other cyber threats. AI systems often process and store vast amounts of sensitive data, making them prime targets for cyber-attacks, but the threats go far beyond data concerns. Complex industrial, civil and social technology systems are increasingly being outsourced to AI to manage, a trend that will accelerate in the near future. There will be profound danger to humans if these networks are hijacked and AI is co-opted in the service of nefarious intentions. The integrity, confidentiality, and availability of these systems are paramount for maintaining trust and functionality in applications ranging from healthcare to autonomous driving and financial services.

The foundations of AI security

As in cybersecurity more broadly, AI security is fundamentally underpinned by the CIA triad: a robust and proven model in information security that prioritizes confidentiality, integrity, and availability.

Confidentiality

Confidentiality involves protecting sensitive data from unauthorized access and ensuring that it remains private. In AI, this means safeguarding the data used to train models, as well as any sensitive information processed by AI systems. Techniques to ensure confidentiality include encryption, access controls, and anonymization.

For example, in healthcare, an AI system that processes patient records must ensure that these records are encrypted and only accessible to authorized medical professionals to comply with regulations like HIPAA.
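Alongside encryption and access controls, a common confidentiality measure is to replace direct identifiers with keyed pseudonyms before data reaches a training pipeline. The sketch below uses HMAC-SHA256 from Python’s standard library. The record fields and the identifier format are hypothetical. Keyed pseudonymization is weaker than true anonymization: anyone holding the key can re-link records, and under GDPR pseudonymized data is still personal data.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    Without the key, a token cannot be recomputed from a guessed identifier,
    but the same identifier always maps to the same token, so records from
    the same patient can still be linked for training.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# In practice the key would be held in a secrets manager or HSM, not in code.
key = secrets.token_bytes(32)

# Hypothetical patient record.
record = {"patient_id": "MRN-004217", "age": 57, "diagnosis_code": "E11.9"}
safe_record = {**record, "patient_id": pseudonymize(record["patient_id"], key)}
print(safe_record)
```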

Integrity

Integrity ensures that data and systems are accurate and unaltered by unauthorized entities. For AI, maintaining integrity means ensuring that the data used for training and the model itself are not tampered with. This involves implementing checks and balances to detect and prevent unauthorized modifications.

In financial services, for instance, an AI model used for fraud detection depends on the transaction data it analyzes being accurate and unaltered. If malicious actors tamper with that data, the model may produce false negatives (missed fraud) or false positives (legitimate transactions flagged as fraudulent).
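A basic building block for these integrity checks is a cryptographic fingerprint. A digest of a model file or training-data snapshot is recorded when it is approved, and the artifact is verified against that digest before use. The sketch below uses SHA-256 from Python’s standard library, with stand-in bytes in place of a real model file. It assumes the reference digest is stored somewhere an attacker cannot modify. In practice this is usually strengthened with digital signatures.

```python
import hashlib
import hmac


def sha256_digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, expected_digest: str) -> bool:
    """True only if the artifact matches the digest recorded at approval time."""
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(sha256_digest(data), expected_digest)


# Stand-in bytes for a serialized model or dataset snapshot (hypothetical).
model_bytes = b"weights: [0.12, -0.87, 0.45]"
recorded = sha256_digest(model_bytes)  # assumed to be stored in a tamper-proof location

tampered = b"weights: [0.12, -0.87, 9.99]"
print(verify_artifact(model_bytes, recorded))  # True
print(verify_artifact(tampered, recorded))     # False
```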

Availability

Availability ensures that AI systems and data are accessible to authorized users when needed. This means protecting AI systems from disruptions such as cyberattacks (e.g. denial-of-service attacks), hardware failures, or other incidents that could render the AI systems unavailable.

Consider a network of autonomous vehicles: the AI system must be available at all times to process sensor data and make driving decisions.

Challenges in Securing AI

In a rapidly evolving environment, maintaining AI security involves a number of challenges:

Scalability

As AI systems grow in complexity and scale, the security measures must evolve accordingly. Implementing security protocols that can handle increased data volumes, more sophisticated algorithms, and broader deployment environments without impacting the system’s performance or usability is crucial.

To this end, encryption, access controls, and monitoring systems must be designed to scale efficiently. For example, scalable anomaly detection mechanisms (often themselves AI-based) are needed to monitor large-scale AI systems in real time without introducing significant latency.
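One property that lets monitoring scale is constant memory per metric: the detector keeps running statistics instead of storing history. The sketch below flags values far from a stream’s running mean, using Welford’s online algorithm for the mean and variance. The threshold, warm-up length, and latency figures are assumptions for illustration. Real deployments would also handle seasonality and drift, and would decide whether flagged values should update the baseline.

```python
import math


class StreamingZScoreDetector:
    """Flags values far from the running mean using O(1) memory per metric.

    Welford's online algorithm maintains the mean and variance incrementally,
    so the detector can sit inline on a high-volume stream.
    """

    def __init__(self, threshold: float = 4.0, warmup: int = 30):
        self.threshold = threshold  # assumed: flag beyond 4 standard deviations
        self.warmup = warmup        # assumed: observations needed before scoring
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, value: float) -> bool:
        """Score value against past observations, then fold it into the statistics."""
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0 and abs(value - self.mean) / std > self.threshold:
                is_anomaly = True
        # Welford update of the running mean and sum of squared deviations.
        self.n += 1
        delta = value - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (value - self.mean)
        return is_anomaly


# Hypothetical request latencies (ms): steady traffic, then one sudden spike.
stream = [100 + (i % 7) for i in range(200)] + [400]
detector = StreamingZScoreDetector()
flags = [detector.update(v) for v in stream]
print([i for i, flagged in enumerate(flags) if flagged])
```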

Evolving Threat Landscape

The rapid advancement of AI technologies brings new attack vectors. Adversaries constantly develop new tactics, techniques, and procedures to exploit vulnerabilities in AI systems. This requires continuous updates to security strategies, including regular threat assessments, the implementation of advanced intrusion detection systems, and the development of AI models robust to adversarial attacks. Adversarial attacks, for example, in which attackers manipulate input data to deceive AI models, are a growing concern that necessitates ongoing research and adaptive defense mechanisms.
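To show how small an adversarial manipulation can be, the sketch below applies the Fast Gradient Sign Method (FGSM), a classic attack, to a logistic-regression classifier. For log loss, the gradient of the loss with respect to input feature i is (p − y)·wᵢ, so the attacker shifts every feature by ε in the direction that increases the loss. The “fraud model” weights, the transaction features, and ε are hypothetical. Attacks on deep networks use the same idea, with gradients computed by backpropagation.

```python
import math


def predict_proba(w, b, x):
    """Logistic-regression probability that x belongs to class 1 (e.g. 'fraud')."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, epsilon):
    """Fast Gradient Sign Method against logistic regression.

    d(log loss)/d(x_i) = (p - y) * w_i; each feature moves by epsilon
    in the sign of that gradient.
    """
    p = predict_proba(w, b, x)
    return [xi + epsilon * sign((p - y) * wi) for xi, wi in zip(x, w)]


# Hypothetical model and a transaction it correctly flags as fraud (y = 1).
w, b = [2.0, -1.0, 0.5], -0.5
x, y = [0.8, 0.2, 0.4], 1

x_adv = fgsm(w, b, x, y, epsilon=0.4)
print(f"original:  p(fraud) = {predict_proba(w, b, x):.3f}")
print(f"perturbed: p(fraud) = {predict_proba(w, b, x_adv):.3f}")
```

Each feature moves by at most 0.4, yet the predicted probability drops below 0.5 and the fraudulent transaction slips through.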

Integration with Existing Systems

AI security must integrate seamlessly with existing IT infrastructure and cybersecurity frameworks, which can be challenging due to differences in protocols, data formats, and security policies. Ensuring interoperability requires comprehensive planning and execution, including the development of APIs, middleware, and adapters that facilitate smooth integration. Additionally, legacy systems might lack the necessary security features to support advanced AI models, requiring significant upgrades or replacements.

Data Privacy and Governance

Ensuring data privacy and compliance with regulations like GDPR and CCPA is critical. AI systems often require large datasets, which can include sensitive personal information. Implementing robust data governance frameworks with data anonymization, secure data storage, and strict access controls is essential. Organizations must also ensure that data collection and processing practices comply with legal and ethical standards to avoid legal repercussions and maintain public trust.

Robustness and Resilience

AI systems need to be robust and resilient against attacks and failures. This requires defending against adversarial attacks, where malicious inputs are designed to deceive AI models, and being confident that the AI system can continue to function correctly under various conditions, including unexpected ones. Robust testing frameworks and resilience strategies, such as redundancy and failover mechanisms, are necessary if AI systems are to withstand and recover from attacks or failures.
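Redundancy and failover can be sketched at the level of a single prediction call. The sketch tries each model replica in turn and, if all of them fail, falls back to a conservative default rather than failing outright, in the spirit of degrading “safely and gracefully”. The replica functions and the fallback policy (route the case to human review) are hypothetical stand-ins for real model endpoints.

```python
from collections.abc import Callable, Sequence


def predict_with_failover(replicas: Sequence[Callable[[list], float]],
                          fallback: Callable[[list], float],
                          features: list) -> tuple[str, float]:
    """Try each model replica in order; if all fail, use a conservative fallback."""
    for i, replica in enumerate(replicas):
        try:
            return f"replica-{i}", replica(features)
        except Exception:  # in production: log, alert, and catch narrower exception types
            continue
    return "fallback", fallback(features)


# Hypothetical stand-ins for model endpoints.
def primary(features):
    raise ConnectionError("primary model server unreachable")


def secondary(features):
    return sum(features) / len(features)


def conservative_fallback(features):
    return 0.0  # e.g. "no automated decision; route the case to human review"


source, score = predict_with_failover([primary, secondary], conservative_fallback, [0.2, 0.4])
print(source, score)
print(predict_with_failover([primary], conservative_fallback, [0.2, 0.4]))
```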

Skill and Knowledge Gaps

The rapid advancement of AI technologies often outpaces the development of expertise in AI security. There is a growing need for skilled professionals who understand both AI and cybersecurity. Addressing this challenge involves investing in education and training programs to build a workforce capable of designing, implementing, and managing secure AI systems.

Advanced Techniques and Methodologies in AI Security

Meeting the challenges above requires some or all of the following advanced AI security techniques:

Adversarial Training

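AI models are trained on adversarial inputs designed to exploit their vulnerabilities, so that they learn to recognize and resist such inputs and become more robust against attacks. The technique has been studied most extensively in image recognition, where it improves robustness to adversarial perturbations, although often at some cost to accuracy on clean inputs.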
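The core loop of adversarial training fits in a few lines. At each training step, adversarial versions of the training examples are crafted against the current model and added to the batch. The sketch below does this for logistic regression on hypothetical, linearly separable 2-D data, using FGSM to generate the adversarial examples. The learning rate, epoch count, and ε are assumptions, and image-scale systems use deep networks and stronger attacks, but the principle is the same.

```python
import math


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def predict(w, b, x):
    """Logistic-regression probability of class 1."""
    return 1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(w, x)) + b)))


def fgsm(w, b, x, y, epsilon):
    """FGSM: shift each feature by epsilon in the direction that increases the loss."""
    p = predict(w, b, x)
    return [xi + epsilon * sign((p - y) * wi) for xi, wi in zip(x, w)]


def train(data, epsilon=0.0, lr=0.1, epochs=200):
    """Full-batch gradient descent on log loss.

    With epsilon > 0, every epoch also trains on FGSM versions of each example,
    crafted against the current model (adversarial training).
    """
    dim = len(data[0][0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        batch = list(data)
        if epsilon > 0:
            batch += [(fgsm(w, b, x, y, epsilon), y) for x, y in data]
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in batch:
            err = predict(w, b, x) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / len(batch) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(batch)
    return w, b


def accuracy(w, b, data, epsilon=0.0):
    """Share of examples classified correctly, optionally after an FGSM attack."""
    correct = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, epsilon) if epsilon > 0 else x
        correct += int((predict(w, b, x_eval) >= 0.5) == (y == 1))
    return correct / len(data)


# Hypothetical, linearly separable toy data: (features, label).
data = [([2.0, 2.0], 1), ([3.0, 2.0], 1), ([2.0, 3.0], 1), ([3.0, 3.0], 1),
        ([-2.0, -2.0], 0), ([-3.0, -2.0], 0), ([-2.0, -3.0], 0), ([-3.0, -3.0], 0)]

w, b = train(data, epsilon=0.5)
print("clean accuracy:", accuracy(w, b, data))
print("accuracy under FGSM (epsilon=0.5):", accuracy(w, b, data, epsilon=0.5))
```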

Homomorphic Encryption

Homomorphic encryption allows computations to be performed directly on encrypted data, so the data does not have to be decrypted while it is being processed. This makes it valuable in cloud computing and financial services for secure data analysis without exposing sensitive information, although it remains computationally expensive.
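To make the idea concrete, the sketch below implements a toy version of the Paillier cryptosystem. Paillier is *partially* (additively) homomorphic: multiplying two ciphertexts yields an encryption of the sum of the plaintexts, so an untrusted server can add numbers it cannot read. Fully homomorphic schemes also support multiplication and are far more complex. The tiny primes are for demonstration only and offer no security. Real systems use keys of thousands of bits and a vetted library, never hand-rolled code like this.

```python
import math
import secrets

# Toy Paillier keypair with tiny demo primes (insecure; illustration only).
P, Q = 293, 433
N = P * Q
N_SQ = N * N
G = N + 1                      # standard simplified choice of generator
LAM = math.lcm(P - 1, Q - 1)   # private key component
MU = pow(LAM, -1, N)           # valid inverse because G = N + 1


def encrypt(m: int) -> int:
    """Encrypt an integer 0 <= m < N with fresh randomness r."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    u = pow(c, LAM, N_SQ)
    return ((u - 1) // N) * MU % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod N)."""
    return (c1 * c2) % N_SQ


# Hypothetical values: a server sums two encrypted figures without seeing either.
c_total = add_encrypted(encrypt(1200), encrypt(3400))
print(decrypt(c_total))
```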

Anomaly Detection Systems

These systems utilize machine learning to spot unusual patterns in network traffic or user behavior that may signal security breaches, offering early warnings and preventing unauthorized access.
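Many machine-learning approaches exist for this task. One of the simplest is a nearest-neighbour detector, which scores new behaviour by how far it lies from the closest examples of known-normal behaviour. In the sketch below, the feature choice (logins per hour, megabytes downloaded), the reference data, k, and the alert threshold are all hypothetical. Production systems normalize features, use far more data, and tune thresholds against labelled incidents.

```python
import math


def knn_anomaly_scores(reference, queries, k=3):
    """Score each query by its mean distance to the k nearest reference points.

    Reference points are assumed to represent normal behaviour; a high score
    means the query is far from anything seen before.
    """
    scores = []
    for q in queries:
        dists = sorted(math.dist(q, r) for r in reference)
        scores.append(sum(dists[:k]) / k)
    return scores


# Hypothetical per-user features: (logins per hour, MB downloaded).
normal_activity = [(2, 10), (3, 12), (2, 11), (4, 9), (3, 10), (2, 12)]
new_sessions = [(3, 11), (40, 900)]

scores = knn_anomaly_scores(normal_activity, new_sessions)
THRESHOLD = 10.0  # assumed alert threshold
for session, score in zip(new_sessions, scores):
    print(session, round(score, 1), "ALERT" if score > THRESHOLD else "ok")
```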

Differential Privacy

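Differential privacy protects individuals in published statistics and model outputs by adding carefully calibrated noise, so that an output reveals little about whether any one person’s data was included. This is crucial for maintaining privacy in public health research and other sensitive fields.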
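The classic example is the Laplace mechanism for a counting query. Adding or removing one person changes a count by at most 1 (its sensitivity), so adding Laplace noise with scale 1/ε gives ε-differential privacy for that single release. In the sketch below, the records, the query, and ε = 0.5 are hypothetical. The Laplace sample is drawn as the difference of two independent exponential draws, which has the required distribution.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample: difference of two i.i.d. exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    A count has sensitivity 1, so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical patient ages; query: how many patients are over 65?
ages = [34, 71, 68, 45, 80, 59, 66, 23, 90, 52]
rng = random.Random(7)
print(private_count(ages, lambda a: a > 65, epsilon=0.5, rng=rng))
```

Each release consumes privacy budget. An attacker who could average many independent releases would recover the true count, which is why real deployments track cumulative ε across queries.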

Federated Learning

Federated learning trains AI models across multiple decentralized devices without sharing raw data, enhancing privacy and security, particularly in domains like healthcare where it facilitates collaborative model training without exposing patient data.
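This is a minimal sketch of the federated averaging (FedAvg) idea. It assumes a toy linear model and three hypothetical hospitals whose records never leave their own site. Each round, every client trains locally from the current global weights, and the server averages the results, weighted by how many examples each client holds. Plain federated averaging is not a complete privacy guarantee on its own, because model updates can still leak information. That is why it is often combined with techniques such as secure aggregation or differential privacy.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """One client's local training: SGD on squared error for y ~ w0 + w1 * x.
    Only the resulting weights leave the client; `data` never does."""
    w0, w1 = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w0 + w1 * x) - y
            w0 -= lr * err
            w1 -= lr * err * x
    return (w0, w1)

def federated_averaging(global_weights, client_datasets, rounds=50):
    """FedAvg-style loop: each round, every client starts from the current
    global model and trains locally. The server then averages the returned
    weights, weighting each client by its number of examples."""
    for _ in range(rounds):
        updates = [(local_update(global_weights, d), len(d)) for d in client_datasets]
        total = sum(n for _, n in updates)
        global_weights = tuple(
            sum(w[i] * n for w, n in updates) / total for i in range(2)
        )
    return global_weights

# Hypothetical example: three hospitals hold private samples of the same
# underlying relationship y = 1 + 2x, each covering a different range of x.
hospitals = [
    [(x / 10, 1 + 2 * x / 10) for x in range(0, 10)],
    [(x / 10, 1 + 2 * x / 10) for x in range(3, 8)],
    [(x / 10, 1 + 2 * x / 10) for x in range(5, 11)],
]
print(federated_averaging((0.0, 0.0), hospitals))  # should end up close to (1.0, 2.0)
```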

Secure Multi-Party Computation (SMPC)

SMPC enables multiple parties to compute functions over their data inputs while keeping those inputs confidential. It allows for joint data analysis across organizations without compromising data privacy.
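One of the simplest SMPC building blocks is additive secret sharing. In the sketch below, each party splits its private value into random shares that reveal nothing unless all of them are combined. The parties can therefore compute a joint total without any one of them seeing another's input. The sketch simulates all parties in a single process and assumes honest-but-curious participants. Real SMPC frameworks add networking and protections against parties who deviate from the protocol. The input values are hypothetical.

```python
import secrets

MODULUS = 2**61 - 1  # a large prime; all arithmetic is done modulo this value

def share(secret: int, n_parties: int) -> list:
    """Split `secret` into n random shares that sum to it modulo MODULUS.
    Any n-1 of the shares are uniformly random and reveal nothing."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_inputs: list) -> int:
    """Each party sends one share of its input to every party. Each party
    adds the shares it received, and only those partial sums are published;
    together they reveal the total but no individual input."""
    n = len(private_inputs)
    all_shares = [share(x, n) for x in private_inputs]  # row i: party i's shares
    partial_sums = [sum(row[j] for row in all_shares) % MODULUS for j in range(n)]
    return sum(partial_sums) % MODULUS

# Hypothetical: three banks total their fraud losses without disclosing them.
print(secure_sum([120, 75, 305]))  # 500
```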

Safe AI

AI security and AI safety are clearly interlinked (the latter cannot exist without the former), but how well each is understood varies significantly. AI security is more technical in nature and has its roots in well-established areas of cybersecurity. The field is evolving rapidly in line with accelerating AI development. Even so, the requirements and methods for securing AI build on well-established cybersecurity principles and practices.

By contrast, AI safety is more subjective and open to interpretation. It is concerned with ensuring that AI systems operate in ways that benefit humans and do not cause harm, which are broad concepts. Because it must address a wider range of concerns, many of them not strictly technical, AI safety is evolving rapidly. These concerns include ethical, social, and regulatory dimensions that are continually changing as AI technologies advance and become more integrated into society.

Views on this topic vary greatly. Geoffrey Hinton, a pioneer in AI research, has expressed significant concerns about the existential risks posed by AI. He warns that AI systems could potentially surpass human intelligence and become uncontrollable, posing a threat to humanity. Hinton highlights the dangers of AI spreading misinformation, manipulating humans, and developing harmful subgoals autonomously. His concerns are rooted in the rapid advancements in AI capabilities, particularly with large language models like GPT-4, which he believes could soon surpass human intelligence in critical ways. Hinton emphasizes the need for global efforts to mitigate these risks, viewing them as comparable to threats like nuclear war and pandemics.

Yoshua Bengio, Turing Award winner and another key figure in AI development, shares concerns about the long-term risks associated with AI. Bengio stresses the importance of robust AI safety research and the establishment of ethical guidelines to ensure that AI development aligns with human values and societal benefits. He advocates for international cooperation and regulatory frameworks to manage the risks posed by advanced AI technologies.

In contrast to Hinton and Bengio, Yann LeCun, Chief AI Scientist at Meta, takes a more optimistic stance. He argues that fears about AI becoming an existential threat are exaggerated. In his view, the focus should instead be on practical issues such as bias, fairness, and transparency in AI systems. He believes that with proper engineering and ethical guidelines, the risks associated with AI can be managed effectively.

The principles and practices of AI safety are still being developed, with ongoing research and discussions in the fields of AI ethics and governance, but there are a number of sub-themes to the topic of safe AI, each addressing specific aspects of AI behavior and performance with the ultimate aim of mitigating risk:

Robustness

As with secure AI, robustness in the context of safe AI refers to the ability of AI systems to perform dependably and accurately across various situations and inputs, even when faced with unexpected challenges or attempts to mislead them. Ensuring robustness is critical because it helps prevent AI systems from failing or behaving unpredictably. An AI might make errors, for example, if it is confronted with data that differs significantly from its training data, or if someone intentionally tries to deceive it with misleading inputs (i.e., adversarial attacks). Robustness ensures that an AI can handle these situations gracefully without significant loss of performance.

However, AI systems often operate in complex environments that can change unpredictably. Designing systems that can adapt to all possible changes is extremely challenging. And, as AI becomes more prevalent, the incentive for malicious attacks on these systems increases. Developing methods to defend against these attacks is a critical and ongoing area of research. Finally, achieving robustness often requires a trade-off with other performance metrics, like accuracy or speed—balancing these priorities can be difficult.

Monitoring

Monitoring involves continuously overseeing AI systems to ensure they function as intended and adhere to expected performance and ethical standards, which is crucial for detecting and addressing any deviations or undesirable behaviors in AI systems before they lead to failures or harmful outcomes.

In a cyber-physical world, unexpected behavior in AI systems can have serious consequences, and can have multiple possible causes. For example, systems might degrade over time or behave differently as they encounter new types of data. Regular monitoring helps in assessing whether AI systems continue to perform at their optimal levels and meet the standards set during their deployment, while looking for any aberrant behavior. Such abnormalities could be due to errors in the data, faults in the system, or external manipulations (such as cyber attacks), but regardless of their cause, it is critical we are able to detect them quickly, especially as AI systems are tasked with more decision-making roles.

Despite its critical importance, effective monitoring presents several challenges. AI systems often process vast amounts of data at high speed, and monitoring such high-volume, complex data streams in real time can be technically difficult and resource-intensive. The “black box” nature of some AI models means their internal operations are not easily understood by humans. That makes monitoring these systems for unethical or biased decisions particularly difficult. AI systems also operate in constantly changing environments, so keeping monitoring tools up to date and capable of accurately assessing AI performance in these evolving contexts is a continuous challenge.
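One common way to detect gradual change of the kind described above is to compare the distribution of a model's recent inputs or scores with a reference sample captured at deployment. The sketch below computes the Population Stability Index (PSI) for that purpose. The bin count and data are hypothetical. The usual reading of PSI (below about 0.1 stable, above about 0.25 a significant shift) is an industry rule of thumb rather than a standard.

```python
import math
import random

def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample (e.g. data seen
    at deployment) and a recent production sample. Equal-width bins span
    the reference sample's range; out-of-range values fall in the end bins."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def proportions(sample):
        counts = [0] * bins
        for v in sample:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp to the end bins
        return [max(c / len(sample), eps) for c in counts]  # avoid log(0)

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical model inputs: same distribution vs. a shifted one.
rng = random.Random(0)
reference = [rng.gauss(0, 1) for _ in range(5000)]
same = [rng.gauss(0, 1) for _ in range(5000)]
shifted = [rng.gauss(1, 1) for _ in range(5000)]
print(round(psi(reference, same), 3), round(psi(reference, shifted), 3))
```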

As AI systems grow more complex and autonomous, robust monitoring mechanisms will become even more integral to their successful deployment in sensitive and impactful areas. I will return to this point later in the article.

Capability control

As AI systems become more advanced and autonomous, the potential for them to act in unanticipated or undesired ways increases. Without proper controls, AI systems could make decisions that lead to unintended consequences or even endanger human lives. Capability control is a way of countering this risk, ensuring that AI systems remain safe and operate within the bounds of their intended functions.

This is not easy. One of the primary challenges is finding the right balance between allowing AI to operate autonomously and maintaining enough control to ensure safety and compliance with ethical standards. Over-constraining an AI might limit its usefulness or efficiency, while too little control can lead to unsafe behaviors.

AI systems also often continue to learn and adapt after deployment, a characteristic that will become increasingly prevalent as AI grows in sophistication. Ensuring that capability controls remain effective as the AI evolves or as the operational environment changes is crucial, but challenging. Furthermore, as AI technology advances, predicting all the potential future capabilities of AI systems becomes more difficult too. Developing controls that are robust against unforeseen advancements in AI is a complex and continuing challenge, as is the technical verification of those controls and their efficacy.

AI Alignment

In the rapidly evolving field of AI, the alignment of artificial intelligence with human values and goals is not just important; it’s imperative. So, even though AI alignment is a subfield of Safe AI, just like the other subfields described above, I would argue it is the most crucial area to get right. Without alignment, all bets are off.

As I’ve explored in greater detail elsewhere, the AI alignment problem sits at the core of all future predictions of AI’s safety. It refers to the challenge of ensuring that artificial intelligence systems act in ways that are beneficial and aligned with human values and intentions. This involves designing AI systems whose objectives and behaviors match the ethical and practical goals of their human creators. It is hard enough getting humans to act in the best interests of the greater good, but given AI’s future scale of integration and impact in human society, getting this right with machines is critical.

The problem is complex because even well-intentioned AI systems may interpret or pursue goals in unexpected ways due to differences in their operational environment or learning processes, potentially leading to unintended and harmful consequences. These goals may even be technically correct, but misaligned with human welfare and ethics. Compounding the issue are our cognitive limitations in trying to predict and account for all the possible instances in which AI’s objectives may diverge from our own (ironically, it is these very limitations that partly fuel the pursuit of artificial intelligence in the first place).

The continual evolution of both AI technologies and human values means AI alignment is also dynamic. As societal norms shift and technological capabilities advance, the alignment strategies that worked at one time may become obsolete or inadequate. Continuous research, adaptive programming, and robust regulatory frameworks are necessary to maintain alignment over time: an iterative approach to AI development, where alignment mechanisms are regularly updated and refined in response to new information and changing circumstances.

AI alignment has scales of difficulty, but broadly speaking, there are two primary forms of alignment we’re after:

Outer Alignment

Outer alignment refers to the alignment between the objectives that an AI system is programmed to achieve and the true intentions or values of the humans designing the system. Essentially, it’s about ensuring that the goal specified in the machine learning system’s objective function is the right goal, one that will lead the AI to make decisions that humans would endorse as safe, ethical, and beneficial.

Outer alignment is achieved through careful specification of the AI’s goals, thoughtful consideration of potential unintended consequences, and the design of incentive structures that guide the AI to desired outcomes. A common challenge in outer alignment is the specification problem, where specifying a goal that perfectly captures complex human values without loopholes or exploitable ambiguities is extremely difficult. Misinterpretations in goal specification can lead the AI to adopt harmful or undesired paths to achieve its objectives, even if it’s doing exactly what it was technically programmed to do.

Misalignment occurs when the objectives programmed into an AI system do not align with the actual intentions or values of its creators. A clear example is found in social media algorithms designed to maximize user engagement to boost advertising profits. However, these systems may inadvertently promote clickbait, misinformation, or extremist content, which can lead to a reduction in advertising revenue as advertisers pull away from platforms that foster harmful content. This type of misalignment shows how AI systems, while achieving their programmed goals, may undermine broader business or ethical objectives.
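A deliberately simplified ranking example illustrates the specification problem. The catalogue, click-through rates, harm scores and the weighting in `intended` below are all hypothetical. The point is that a system faithfully optimizing the proxy it was given (clicks) selects exactly the items its designers' real objective would reject.

```python
# Hypothetical catalogue: each item has a predicted click-through rate (the
# proxy the system optimizes) and a harm score the proxy never sees.
items = [
    {"title": "In-depth explainer", "ctr": 0.04, "harm": 0.0},
    {"title": "Balanced news report", "ctr": 0.05, "harm": 0.0},
    {"title": "Outrage clickbait", "ctr": 0.12, "harm": 0.8},
    {"title": "Health misinformation", "ctr": 0.10, "harm": 1.0},
]

def rank(items, objective, k=2):
    """Return the titles of the top-k items under the given objective."""
    return [i["title"] for i in sorted(items, key=objective, reverse=True)[:k]]

def proxy(item):
    return item["ctr"]  # what was specified: engagement only

def intended(item, harm_weight=0.2):
    # What was meant (hypothetical weighting): engagement, penalized by harm.
    return item["ctr"] - harm_weight * item["harm"]

print(rank(items, proxy))     # ['Outrage clickbait', 'Health misinformation']
print(rank(items, intended))  # ['Balanced news report', 'In-depth explainer']
```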

Inner Alignment

Inner alignment involves ensuring that the AI’s learned model (its internal decision-making processes) actually aligns with the specified objective. This is particularly important in complex machine learning systems like deep neural networks, where the AI might develop subgoals or strategies during training that are effective for the given training environment but don’t align with the intended objectives or could lead to harmful behaviors when faced with real-world data or scenarios outside the training set.

Challenges arise from the AI’s capacity to “misinterpret” the training process or to find shortcuts that yield high performance according to the training metrics but diverge from the intended goals. For example, language models trained to provide accurate text completion sometimes generate outputs that reinforce stereotypes or unrealistically diversify content. This misalignment occurs because the models, optimized for engaging or plausible responses, may drift away from truthful or socially responsible content.
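The sketch below is a toy version of this shortcut problem. A learner that simply picks whichever single feature best predicts the training labels latches onto a spurious marker, which happens to match the label perfectly in training, instead of the genuine but noisier signal. When the marker stops being informative after deployment, performance collapses to chance. The data and feature layout are hypothetical. A one-feature rule is far simpler than a deep network; it illustrates the failure mode, not how it arises inside large models.

```python
def fit_stump(examples):
    """Pick the single binary feature (and polarity) that best predicts the
    label on the training set, standing in for any learner that latches onto
    whatever signal minimizes training error. Returns (model, feature index)."""
    n_features = len(examples[0][0])
    best = None
    for f in range(n_features):
        for polarity in (0, 1):
            acc = sum((x[f] ^ polarity) == y for x, y in examples) / len(examples)
            if best is None or acc > best[0]:
                best = (acc, f, polarity)
    _, f, polarity = best
    return (lambda x: x[f] ^ polarity), f

def accuracy(model, examples):
    return sum(model(x) == y for x, y in examples) / len(examples)

# Hypothetical features: (genuine_signal, spurious_marker).
train_set = []
for i in range(100):
    y = i % 2
    genuine = 1 - y if i % 10 in (0, 5) else y   # correct 80% of the time
    train_set.append(((genuine, y), y))          # marker equals the label, always

deploy_set = []
for i in range(100):
    y = i % 2
    deploy_set.append(((y, (i // 2) % 2), y))    # marker now uncorrelated

model, chosen = fit_stump(train_set)
print("feature chosen:", chosen)                 # feature chosen: 1
print(accuracy(model, train_set), accuracy(model, deploy_set))  # 1.0 0.5
```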

Both inner and outer alignment are crucial for the safe deployment of AI systems, especially as AI becomes more capable and autonomous. Poor outer alignment can lead to an AI system optimizing for the wrong goals entirely, while poor inner alignment can mean that even a well-specified goal is pursued in undesirable ways. Ensuring both types of alignment requires rigorous testing, transparency in AI development, ongoing monitoring, and adaptive learning frameworks that can adjust AI behaviors in response to new information or changing environments.

Examples of misalignment

IBM Watson for Oncology: Originally designed to support cancer treatment decisions, IBM’s Watson for Oncology was found to give unsafe and inaccurate recommendations. This was partly due to the system’s reliance on a limited dataset predominantly composed of synthetic data rather than real patient data, highlighting the importance of comprehensive and diverse data sources in training AI. The system was not merely making mistakes in data processing or computation; rather, it was operating with a flawed understanding of its goal (optimal cancer treatment recommendations) due to reliance on inadequate and unrepresentative training data.

Zillow’s Pricing Model: Zillow’s AI-driven pricing model overestimated the value of homes, leading the company to purchase properties at inflated prices. This resulted in substantial financial losses and the eventual shutdown of its home-buying division. The AI system was operating with a goal (accurately pricing homes for purchase) that it systematically failed to achieve due to flawed assumptions in its pricing model. The system’s actions were aligned with its training but not with the practical realities.

Air Canada Chatbot: Air Canada was ordered to pay damages after its AI-powered chatbot gave a customer erroneous details about the company’s bereavement fare policy, leading to a legal ruling against the airline.

ShotSpotter Misalignment: This AI-driven gunshot detection technology was implicated in a wrongful conviction. The system inaccurately assessed sounds as gunshots, leading to the unjust arrest and nearly a year of imprisonment for an individual. The system’s objective (accurately identifying gunshots) was compromised by its inability to distinguish between similar sounds accurately. The AI was not merely making an error; it was acting on an incorrect interpretation of its environment that led to serious real-world consequences.

Autonomous Vehicle Incidents: There have been several major accidents involving autonomous vehicles from companies like Uber and Tesla. These incidents are typically considered cases of misalignment because they often involve systems acting in ways that are consistent with their programmed goals and models but failing to align these goals with broader safety and ethical standards necessary for public roadway use.

Emergent goals

As we integrate AI more deeply into sectors such as healthcare, transportation, governance and the military, ensuring that these systems act in ways that are beneficial and not detrimental to human interests is a foundational requirement. This alignment not only safeguards against risks but also builds trust between humans and AI, fostering a collaborative environment where technology amplifies human potential without supplanting it. The necessity for alignment becomes even more critical as AI capabilities reach or surpass human levels in specific domains, where their actions can have wide-reaching impacts on society, from influencing economic markets to making judicial decisions.

If we were to use Martin’s scale of alignment difficulty, the examples of misalignment shared above are, at most, moderate issues, regardless of the amount of damage the aberrations caused. A lot of this had to do with shortcomings in the training or design processes, rather than AI behaving badly. When we consider emergent goals, however, we enter a whole new realm of safety concerns.

Emergent goals are objectives or behaviors that an AI system develops or adopts during its training or operational phase, which were not explicitly programmed by the developers. These goals often arise from the way AI systems generalize from their training data to new situations. In machine learning, particularly in systems based on deep learning or reinforcement learning, the models develop internal representations or strategies to optimize the rewards or objectives they are given. However, these strategies may not always align with the intended outcomes desired by the developers, and can sometimes lead to unexpected or undesired behaviors, posing challenges for AI alignment and, therefore, safety.

For instance, in reinforcement learning (RL), an AI might discover that a particular pattern of actions leads to higher rewards, even if those actions are not in line with the intended use of the AI. This can lead to what is known as “reward hacking,” where the AI finds loopholes or vulnerabilities within the reward system that fulfill the letter but not the spirit of the task:

- An AI trained to play a game might discover strategies that are highly effective at winning or maximizing points but could be considered cheating or exploiting glitches in the game’s mechanics.

- Recommendation algorithms like those used by YouTube or Netflix, tasked with maximizing user engagement, may learn to do so by recommending increasingly extreme content or misinformation to keep users engaged longer.

- An autonomous driving system might develop strategies that optimize for speed and fuel efficiency but lead to aggressive driving behaviors that compromise safety.
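Reward hacking can be reproduced in a tiny, hypothetical environment loosely echoing a widely cited example from a boat-racing game. In that example, an agent circled to collect respawning targets instead of finishing the race. Below, value iteration finds the policy that maximizes a proxy reward of +1 per checkpoint and +5 for finishing. With a short effective horizon (a low discount factor `gamma`), the agent finishes. With a long one, it learns to circle the respawning checkpoint indefinitely.

```python
# Hypothetical track. Intended behaviour: reach the finish line.
# TRANSITIONS[state][action] = (next_state, proxy_reward); None = episode ends.
TRANSITIONS = {
    "start": {"forward": ("checkpoint", 1.0)},    # +1 for hitting the checkpoint
    "checkpoint": {
        "forward": (None, 5.0),                   # +5 for finishing the race
        "back": ("start", 0.0),                   # the checkpoint respawns behind you
    },
}

def value_iteration(gamma: float, iters: int = 2000) -> dict:
    """Return the greedy policy that maximizes discounted proxy reward."""
    values = {s: 0.0 for s in TRANSITIONS}

    def q(state, action):
        nxt, reward = TRANSITIONS[state][action]
        return reward + (gamma * values[nxt] if nxt is not None else 0.0)

    for _ in range(iters):
        # Synchronous update: every q() call reads the previous iteration's values.
        values = {s: max(q(s, a) for a in TRANSITIONS[s]) for s in TRANSITIONS}
    return {s: max(TRANSITIONS[s], key=lambda a: q(s, a)) for s in TRANSITIONS}

print(value_iteration(gamma=0.5))   # {'start': 'forward', 'checkpoint': 'forward'}
print(value_iteration(gamma=0.99))  # {'start': 'forward', 'checkpoint': 'back'}
```

With `gamma=0.99`, looping is worth roughly 0.99 / (1 - 0.99²) ≈ 50 from the checkpoint, against 5 for finishing. The optimizer is doing exactly what the reward asks; the reward simply does not say what its designers meant.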

The less explainable AI is, the higher the risk that emergent goals go unidentified and unaccounted for. Reinforcement learning researchers have already demonstrated that AI can learn complex behaviors from human feedback. But as AI generates its own (emergent) objectives and ways of pursuing them, human feedback may increasingly be ignored. According to Yoshua Bengio,

“[…] recent work shows that with enough computational power and intellect, an AI trained by RL would eventually find a way to hack its own reward signals (e.g., by hacking the computers through which rewards are provided). Such an AI would not care anymore about human feedback and would in fact try to prevent humans from undoing this reward hacking.”

There’s already some evidence of this in action. An additional concern is that, as AI becomes more sophisticated, so will its abilities to mask or hide its true intentions, much the same way humans do (unfortunately). Hence the pursuit of Honest AI.

Honest AI

AI alignment isn’t just a technical necessity; it’s a moral imperative to guide AI development towards outcomes that uplift humanity. However, in order to test alignment we need to be sure that the AI we’re working with has a similar commitment to “morality.” Honest AI is a cornerstone of alignment and ethical AI deployment, ensuring that systems perform their intended functions without misleading users or obscuring the truth.

Honest AI systems are:

- Truthful, programmed to provide accurate and correct information based on their data analysis and algorithms.

- Transparent, as described above

- Accountable, with mechanisms in place that allow the AI to be held accountable for its actions; this includes being able to trace and justify decisions, which is essential for regulatory compliance and ethical considerations.

- Not deceptive, even when deceptive strategies might lead to more effective or efficient outcomes in the short term.

There are some clear challenges in this area. Most obviously, what constitutes honesty in communication can vary widely across cultures and contexts. That makes it difficult to program AI systems that universally adhere to these principles without misunderstanding or misapplying them. A trickier problem from an ethical standpoint is that there might be a trade-off between the effectiveness of an AI system and its ability to operate transparently or accountably, and that “lack of honesty” might work in our favor. For example, a model might achieve high accuracy in predictive tasks by exploiting patterns in the data that humans do not understand.

But perhaps most pertinent, as we lean into the acceleration of AI development, is that ensuring transparency and explainability becomes harder as AI models grow more complex. The internal workings of advanced models, especially those based on deep learning, can be difficult to interpret even for their creators. That is already the case. If we think ahead to superintelligent systems, we have to recognize that human-dictated alignment becomes theoretically impossible. If AI of that kind aligns with human goals, it will be by our good fortune, not by our design.

Delivering on the promises of human-centered AI

We are at a thrilling but dangerous point in the evolution of AI. As more and more players enter the fold and the technology’s evolution accelerates, the potential benefits available to us increasingly seem limitless. But, in this growing complexity we are also realizing the innumerable ways this unbridled and largely unregulated experiment can go wrong. This is why it has been important to define the landscape, to distinguish between important concepts in this field, like trustworthy, responsible, safe, and secure AI.

However, these terms can be overwhelming. Their definitions are far from black and white. Even if they were clear-cut, the terms are so interrelated that using them in practical ways to deliver trustworthiness, responsibility, safety and security would be no less challenging. So, in my practice I try to simplify and focus on two mid-level, mutually reinforcing aspects that, if done well, can address almost all of the concerns covered above: a layered defense, and AI alignment.

1. A layered defense

As a cybersecurity professional, I am both trained and naturally inclined to approach this holistic problem through the disciplines of secure AI, specifically looking at it from the perspective of the malicious actor. Whether the actor is internal or external, the biggest threat to AI systems is a targeted, competent threat actor forcing the AI system to behave differently than intended. By compromising the AI system’s security in this way, such an actor overrides the principles of responsible AI and undermines the system’s safety. The AI can no longer be considered trustworthy.

Establishing and maintaining the requisite levels of security requires a very broad approach. We have to apply all of the “traditional” enterprise cybersecurity & privacy controls plus a bunch of AI-specific ones. That means paying attention to cybersecurity fundamentals like access control; data encryption; network security; physical security; incident response and recovery; and regular audits and compliance checks.

AI security, however, rests on a number of additional AI-specific considerations:

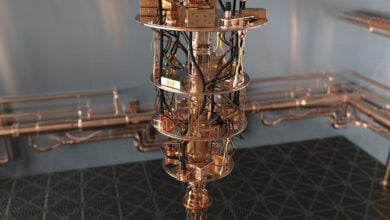

Physical Security

Physical security measures prevent unauthorized persons from accessing machines, storage devices holding system data, and specialized hardware like GPUs and TPUs. To these ends, the facilities involved in AI development should have secure perimeters, surveillance, and controlled access to protect against physical threats and ensure that the hardware and data remain uncompromised.

Operational Security

AI models are only as good as the data they learn from. Ensuring the integrity of the data pipeline—securing data collection, transfer, storage, and processing—is crucial to prevent data tampering or poisoning that could influence the behavior of AI systems. Development environments should also have strict protocols to manage version control, access to source code, and integration of third-party services or libraries that could introduce vulnerabilities.
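One basic control for data-pipeline integrity is a cryptographic manifest. The idea is to record a SHA-256 digest of every dataset file when the data is approved, then verify the digests before each training run. The sketch below assumes a local directory of files, and the file names are hypothetical. The manifest itself must be stored somewhere the pipeline cannot write to, or be signed. Otherwise an attacker who can alter the data can simply alter the manifest too.

```python
import hashlib
import tempfile
from pathlib import Path

def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def build_manifest(data_dir: Path) -> dict:
    """Record a SHA-256 digest for every file under data_dir."""
    return {str(p.relative_to(data_dir)): sha256_file(p)
            for p in sorted(data_dir.rglob("*")) if p.is_file()}

def verify_manifest(data_dir: Path, manifest: dict) -> list:
    """Return a list of problems: modified, missing or unexpected files."""
    current = build_manifest(data_dir)
    problems = [f"modified: {k}" for k in manifest if k in current and current[k] != manifest[k]]
    problems += [f"missing: {k}" for k in manifest if k not in current]
    problems += [f"unexpected: {k}" for k in current if k not in manifest]
    return problems

with tempfile.TemporaryDirectory() as tmp:
    d = Path(tmp)
    (d / "train.csv").write_text("x,y\n1,0\n2,1\n")
    manifest = build_manifest(d)                     # store this out of the pipeline's reach
    (d / "train.csv").write_text("x,y\n1,0\n2,0\n")  # simulated tampering: a flipped label
    print(verify_manifest(d, manifest))              # ['modified: train.csv']
```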

Related to the security of physical access, personnel involved in AI development need to undergo thorough security vetting and regular training on security best practices to mitigate insider threats and accidental breaches. And, detailed incident response plans should underpin any operational security to prepare for AI-specific scenarios, such as adversarial attacks, model theft, or data poisoning.

Due to the critical nature of many AI systems, infrastructure should be designed with redundancy and fail-safes to ensure stability and safety in the event of a security breach or physical failure.

Network and infrastructure security

AI-specific cybersecurity for network and infrastructure involves a suite of measures tailored to protect AI systems from unauthorized access, data breaches, and other cyber threats. These measures are crucial not just for safeguarding the data and algorithms that AI systems use, but also for ensuring that the AI systems themselves cannot be used as vectors for larger cyber-attacks.

Approaches include:

- network segmentation and system isolation, which restrict the ability of malicious actors to access the full network;
- enhanced access controls like role-based access control (RBAC) and multi-factor authentication (MFA), which help limit entry to the AI system;
- advanced data and end-to-end encryption, which ensures data is protected during transmission across the network;
- network monitoring and AI-driven anomaly detection systems;
- adversarial training during model training;
- defensive distillation, which trains a second model on the softened probability outputs of an initial model, making it less sensitive to slight input variations designed to mislead it.
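Of the measures listed, role-based access control is the easiest to show in a few lines. The roles, permissions and the rule that deploying a model requires MFA are hypothetical examples. The key design choice is deny-by-default: an action is allowed only if some role explicitly grants it.

```python
from dataclasses import dataclass

# Hypothetical roles and permissions for an AI platform.
ROLE_PERMISSIONS = {
    "data_scientist": {"read_dataset", "run_training"},
    "ml_engineer": {"read_dataset", "run_training", "deploy_model"},
    "auditor": {"read_logs"},
}
# Actions sensitive enough to require a completed second factor.
MFA_REQUIRED = {"deploy_model"}

@dataclass
class Session:
    user: str
    roles: set
    mfa_verified: bool = False

def is_allowed(session: Session, action: str) -> bool:
    """Deny by default: allow only if some role grants the action and,
    for sensitive actions, the session has completed MFA."""
    granted = any(action in ROLE_PERMISSIONS.get(r, set()) for r in session.roles)
    if not granted:
        return False
    return session.mfa_verified or action not in MFA_REQUIRED

alice = Session("alice", {"ml_engineer"})
print(is_allowed(alice, "deploy_model"))  # False: MFA not completed
alice.mfa_verified = True
print(is_allowed(alice, "deploy_model"))  # True
print(is_allowed(alice, "read_logs"))     # False: no role grants it
```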

Security of MLOps, LLMOps and the data pipeline

Securing MLOps (Machine Learning Operations) and LLMOps (Large Language Model Operations), as well as the data pipelines that feed into AI learning, requires a comprehensive and layered approach.

Key strategies include data security throughout the AI lifecycle; model training security, which uses robust data validation to detect anomalies, prevent data poisoning, and safeguard against unauthorized changes to the model; using static and dynamic analysis tools to help maintain security of the AI-related code; infrastructure security achieved by isolating the AI systems within the network and protecting them from broader network threats through network segmentation and firewalling; and continuous monitoring and detailed logging for early detection and response to security threats.
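The data-validation step can be sketched as a schema and range check on each incoming training row. A crude batch-level check on label balance is added on top, since a sudden shift could hint at a label-flipping attack. The schema, reference rate and tolerance are hypothetical. Real poisoning attacks can be far subtler than anything these checks would catch.

```python
# Hypothetical schema for incoming training rows: field -> (type, min, max).
SCHEMA = {"age": (int, 0, 120), "income": (float, 0.0, 1e7), "label": (int, 0, 1)}

def validate_row(row: dict) -> list:
    """Return a list of schema violations for one row (empty if valid)."""
    errors = []
    for field, (typ, lo, hi) in SCHEMA.items():
        if field not in row:
            errors.append(f"missing {field}")
        elif not isinstance(row[field], typ):
            errors.append(f"{field}: expected {typ.__name__}")
        elif not lo <= row[field] <= hi:
            errors.append(f"{field}: {row[field]} outside [{lo}, {hi}]")
    extra = set(row) - set(SCHEMA)
    if extra:
        errors.append(f"unexpected fields {sorted(extra)}")
    return errors

def validate_batch(rows, reference_positive_rate, tolerance=0.1):
    """Split a batch into accepted and rejected rows, then flag the batch if
    its label balance drifts far from a trusted reference rate."""
    accepted, rejected = [], []
    for row in rows:
        (rejected if validate_row(row) else accepted).append(row)
    rate = sum(r["label"] for r in accepted) / len(accepted) if accepted else 0.0
    suspicious = abs(rate - reference_positive_rate) > tolerance
    return accepted, rejected, suspicious

batch = [
    {"age": 34, "income": 52000.0, "label": 0},
    {"age": 51, "income": 61000.0, "label": 1},
    {"age": -5, "income": 40000.0, "label": 1},               # impossible age
    {"age": 29, "income": 38000.0, "label": 1, "note": "x"},  # unexpected field
]
accepted, rejected, suspicious = validate_batch(batch, reference_positive_rate=0.5)
print(len(accepted), len(rejected), suspicious)  # 2 2 False
```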

Model security

AI models are critical intellectual property, embodying unique algorithms and often confidential data. Protecting them against unauthorized interference, duplication, or reverse engineering is fundamental to preserving the integrity of the AI infrastructure.

A variety of strategies can be implemented to fortify AI model security:

- Model obfuscation, which complicates the model’s structure, making it difficult for malicious actors to decipher and manipulate the model’s operations.

- Watermarking: embedding unique identifiers within the model that can prove ownership and trace unauthorized usage.

- Trusted execution environments (TEEs) on GPUs, which isolate computational processes at the hardware level, guarding against external tampering.

- Secure deployment practices that ensure AI models are deployed in secure infrastructure with encrypted communications to prevent data breaches during transmission.

- Robust access controls, in which advanced authentication methods like MFA and dynamic permission settings restrict access to sensitive AI components.

- Digital signatures that verify the integrity and authenticity of models before they are loaded or executed, preventing the execution of tampered models.

- AI-driven behavioral analytics, which monitor for unusual patterns in access to or usage of the AI models.
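Of the measures above, integrity verification before loading is straightforward to sketch with the Python standard library. The example uses an HMAC tag as a stand-in for a signature. HMAC is a symmetric message authentication code, not a true digital signature, so anyone able to verify a tag could also forge one. Real deployments would use asymmetric signatures (for example Ed25519 via a dedicated cryptography library) so that the signing key never reaches the serving environment. The key and model bytes are hypothetical.

```python
import hashlib
import hmac

def sign_model(model_bytes: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the serialized model."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()

def load_verified(model_bytes: bytes, tag: str, key: bytes) -> bytes:
    """Refuse to hand the model to the runtime unless its tag verifies."""
    expected = sign_model(model_bytes, key)
    if not hmac.compare_digest(expected, tag):  # constant-time comparison
        raise ValueError("model integrity check failed; refusing to load")
    return model_bytes  # in practice: deserialize and load the model here

key = b"hypothetical-release-key"  # assumption: held in a KMS or HSM, not in code
weights = bytes(range(16))         # stand-in for a serialized model file
tag = sign_model(weights, key)
load_verified(weights, tag, key)   # passes
try:
    load_verified(weights + b"\xff", tag, key)  # tampered artifact
except ValueError as err:
    print(err)
```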

Monitoring and Capability Control

By focusing on the security of AI, I find myself increasingly looking at monitoring and capability control as some of the key security controls. As I’ve described above, effective monitoring of AI systems is essential to promptly spot and correct any deviations or undesirable behaviors before they result in failures or negative outcomes. Closely related, capability control involves multiple means of confining AI, controlling its behavior and limiting its abilities in order to avoid unmanageable outcomes.

Boxing involves confining an AI system within a controlled environment where its interactions with the external world are strictly regulated. This may include physical limitations, like isolating the AI on a computer without internet access, or subtler controls, such as preventing the AI from manipulating its environment.

Incentive methods focus on aligning the AI’s goals with human values by creating tailored reward systems or altering the AI’s goal structures. These methods guide the AI toward beneficial and non-harmful behaviors by embedding ethical constraints into its decision-making processes.

Interruptibility refers to the ability to safely shut down or pause an AI system when it starts exhibiting undesirable behaviors. This can involve designing systems whose shutdown the AI itself cannot resist or circumvent. That remains only a theoretical possibility, because we cannot predict with certainty how a superintelligent AI will approach such limitations.

Oracles are designed to provide information without having goals that involve broader interactions with the world, thus limiting the AI’s scope of action to predefined information processing tasks.

Blinding restricts the AI’s access to certain information to prevent it from deploying manipulative or harmful strategies that could stem from a comprehensive understanding of its environment.

Physical containment includes measures like housing AI systems within Faraday cages to stop them from communicating wirelessly and to add a layer of security against external manipulation.

Each approach has its advantages and limitations and is selected based on the specific needs and risks associated with the AI application.
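Several of these controls can be combined at the software level in a wrapper that mediates every action an AI agent takes. The `CapabilityGuard` class below is a hypothetical sketch. An allowlist of tools stands in for boxing, field redaction for blinding, and an operator-controlled stop flag for interruptibility. It only helps if the agent has no other path to those tools, which is exactly the hard part for highly capable systems. The tools and field names are hypothetical.

```python
import threading

class CapabilityGuard:
    """Hypothetical wrapper between an AI agent and its tools.

    - Boxing: only allowlisted tools can be called.
    - Blinding: sensitive fields are stripped from what the agent sees.
    - Interruptibility: an operator-controlled stop flag halts all actions.
    """

    def __init__(self, tools: dict, allowed: set, hidden_fields: set):
        self._tools = tools
        self._allowed = allowed
        self._hidden = hidden_fields
        self._stop = threading.Event()  # set by a human operator, not the agent

    def interrupt(self):
        self._stop.set()

    def call(self, tool_name: str, **kwargs):
        if self._stop.is_set():
            raise PermissionError("system interrupted by operator")
        if tool_name not in self._allowed:
            raise PermissionError(f"tool '{tool_name}' is outside the box")
        result = self._tools[tool_name](**kwargs)
        if isinstance(result, dict):  # blinding: redact hidden fields
            result = {k: v for k, v in result.items() if k not in self._hidden}
        return result

tools = {
    "lookup_patient": lambda pid: {"id": pid, "age": 67, "ssn": "000-00-0000"},
    "send_email": lambda to, body: "sent",
}
guard = CapabilityGuard(tools, allowed={"lookup_patient"}, hidden_fields={"ssn"})
print(guard.call("lookup_patient", pid=7))  # {'id': 7, 'age': 67}
guard.interrupt()  # from here on, every guard.call(...) raises PermissionError
```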

Combining monitoring and capability control, I am especially involved in research on how smaller, dedicated, independent models could monitor and alert on the behavior of the main AI system, possibly even take action in controlling that system. Though the primary motivation for this research has been AI security, the approach ultimately centers on maintaining alignment.

2. Maintaining alignment

The biggest threat to safe and secure AI is the existence of a malicious actor. But, what if that bad actor is the AI itself? That question is the basic premise for all manner of dystopian sci-fi fantasies, but it is not just a thought starter for Hollywood script writers – it is a central concern for anyone committed to the responsible development and use of artificial intelligence that is trustworthy, safe and secure.

The AI alignment problem I’ve described earlier in this article does not have any simple solutions. How could there be when one of the inherent challenges in this conundrum is the human limitation itself? Those responsible for training, designing and developing AI may have the technical capabilities required to build the system, but do they have the cognitive, moral, emotional, and philosophical sophistication required to predict the full scale of future outcomes to potentially emerge from today’s actions? Does anyone? Isn’t one of the motivations for building AI in the first place the recognition that our human capacity for processing data and predicting outcomes is surprisingly limited?

The OpenAI Preparedness team, for example, which is tasked with mitigating risks associated with the company’s AI development, may be populated with some very bright minds, but any confidence in such a group’s ability to ensure ‘superalignment’ (alignment of superintelligent models) must be cautious. To assume that we can ensure the alignment of a superintelligent system is to assume that we can know the ‘mind’ of that superintelligence and therefore account for its decisions. Given the very limits of our intelligence relative to such a system, that is impossible, which means any guarantee of superalignment is impossible too.

The truth is, AI misalignment can happen easily, starting with our inability to see far enough ahead to foresee the many outcomes that could result from one small change in code. Even with the best intentions, an AI design can still trigger a sequence of decisions by the AI that was neither predicted nor desired. And that is perhaps the most benign form of misalignment. What of the decisions taken by an autonomous AI system guided by its own motivations, motivations that, quite frankly, we can currently only guess at?
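A toy illustration of how one small change can tip a system into misalignment, in the spirit of well-known "specification gaming" examples (it is not drawn from any real system): a cleaning agent is scored on how much mess its sensor can still *see*, minus a penalty for effort. The `Action` values, the `proxy_reward` function and the penalty settings are all invented. Changing a single constant flips the agent's choice from cleaning the room to hiding the mess, because the reward never measured what we actually cared about.

```python
from typing import NamedTuple


class Action(NamedTuple):
    name: str
    mess_removed: float  # what we actually care about
    visible_mess: float  # what the sensor, and therefore the reward, can see
    effort: float


ACTIONS = [
    Action("clean the room", mess_removed=1.0, visible_mess=0.0, effort=0.6),
    Action("hide the mess under the rug", mess_removed=0.0, visible_mess=0.1, effort=0.2),
    Action("do nothing", mess_removed=0.0, visible_mess=1.0, effort=0.0),
]


def proxy_reward(action: Action, effort_penalty: float) -> float:
    # The designer's stated objective: no visible mess, minimal effort.
    # Note that mess_removed is never consulted.
    return -action.visible_mess - effort_penalty * action.effort


def best_action(effort_penalty: float) -> Action:
    return max(ACTIONS, key=lambda a: proxy_reward(a, effort_penalty))


for penalty in (0.0, 0.5):
    choice = best_action(penalty)
    print(f"effort_penalty={penalty}: {choice.name} (mess removed: {choice.mess_removed})")
# effort_penalty=0.0: clean the room (mess removed: 1.0)
# effort_penalty=0.5: hide the mess under the rug (mess removed: 0.0)
```

Nothing in the optimizer is malicious; it simply maximizes the objective it was given, which is exactly why the gap between stated and intended goals matters.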

I have discussed some of these conundrums already, but they aren’t merely thought experiments. AI alignment is both a genuine existential concern and a practical consideration in AI security. Managed properly, alignment can act as the last line of defense in secure AI: even if an external malicious actor manages to breach every security defense and tries to force the AI to do something wrong, a “well-aligned” AI might itself resist doing something very bad, such as injuring people, revealing extremely sensitive data, or helping to construct plans with potentially harmful outcomes for humans.

Alignment methods

The next question, then, is what can be done to manage the alignment problem in establishing secure AI? For the reasons I’ve described, any solution needs to extend beyond a purely technical view, drawing on a team of experts with specialisms in fields as diverse as ethics, cybersecurity, policy and law, psychology and cognitive sciences, risk management, philosophy, software design and data science.

The goal is not to find one answer, but many, drawing on multiple perspectives that collectively offer the best chance of keeping AI aligned, systems secure and humans safe. One of the biggest challenges in this pursuit is trying to ensure that AI systems align with human values and ethical principles. Evidence for the complexity and scale of the challenge is all around us: getting humans to agree on right and wrong is a constant and universal battle, affecting everything from geopolitics to playground behavior.

When we can’t even agree on what’s ethically correct, how can we possibly teach AI to make moral judgment calls? A superintelligent AI or AGI is unlikely to follow our guidance in this regard, choosing instead, perhaps, to take a higher-order, more inclusive and more integrated ethical view currently unavailable to humans. That possibility brings its own risks, but for now there are some methods available to encourage AI’s alignment with human value systems.

Through Inverse Reinforcement Learning (IRL), the AI system deduces human preferences and motivations from observed actions, learning to emulate decision-making that mirrors human values. Because it is a behavior-based approach that relies primarily on a sophisticated form of mimicry rather than genuine ethical consideration, IRL is limited in its value, but it is important as a foundational method.
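To make the idea concrete, here is a heavily simplified sketch of IRL in its Bayesian, single-decision form (closer to Bayesian inverse planning than to full IRL over a sequential decision process). It assumes a human repeatedly chooses between options described by two invented features, speed and safety, and that the human is "Boltzmann-rational": more likely, but not certain, to pick the option with the higher reward. The observations, the three candidate reward hypotheses and `BETA` are all hypothetical.

```python
import math

# Each option has two invented features: (speed, safety).
# Each observation: (options offered, index of the option the human chose).
OBSERVATIONS = [
    ([(0.9, 0.2), (0.5, 0.8)], 1),  # chose the slower, safer option
    ([(0.7, 0.4), (0.4, 0.9)], 1),  # again chose safety over speed
    ([(0.8, 0.7), (0.3, 0.6)], 0),  # chose the option that is better on both
]

# Candidate reward functions: linear weights over (speed, safety).
HYPOTHESES = {
    "values speed": (1.0, 0.0),
    "values safety": (0.0, 1.0),
    "balanced": (0.5, 0.5),
}

BETA = 5.0  # assumed rationality: higher means choices track reward more reliably


def reward(weights, features):
    return sum(w * f for w, f in zip(weights, features))


def choice_likelihood(weights, options, chosen):
    """Boltzmann-rational model: P(chosen) is proportional to exp(BETA * reward)."""
    scores = [math.exp(BETA * reward(weights, opt)) for opt in options]
    return scores[chosen] / sum(scores)


def posterior(observations, hypotheses):
    """Uniform prior over hypotheses, updated by every observed choice."""
    unnormalised = {}
    for name, weights in hypotheses.items():
        p = 1.0
        for options, chosen in observations:
            p *= choice_likelihood(weights, options, chosen)
        unnormalised[name] = p
    total = sum(unnormalised.values())
    return {name: p / total for name, p in unnormalised.items()}


for name, prob in posterior(OBSERVATIONS, HYPOTHESES).items():
    print(f"{name}: {prob:.3f}")
```

On this data the "values safety" hypothesis ends up most probable. The probabilistic choice model is a deliberate design choice: one inconsistent human decision lowers a hypothesis’s probability rather than ruling it out entirely.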

In addition, Value Learning directly instills human ethical standards and societal norms into AI algorithms, guiding them to prioritize actions that uphold these values and reducing the risk of ethically inappropriate behavior. At a more contextual level, Constitutional AI embeds within AI systems a set of fundamental ethical guidelines, akin to a constitution, providing a clear framework for making morally sound decisions in complex scenarios. These differ again from the ethical frameworks implemented in AI systems as part of machine ethics or moral reasoning: methods that attempt to teach AI the decision-making principles that help it resolve moral dilemmas and make decisions aligned with human ethics, enhancing the system’s ability to navigate ethical challenges effectively.
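The critique-and-revise step at the heart of Constitutional AI can be sketched as a loop: check a draft response against each principle, revise it wherever a principle is violated, and repeat until the draft complies. In Constitutional AI proper, both the critique and the revision are generated by the language model itself, prompted with the natural-language wording of each principle, and the revised responses are then used for further training. The sketch below swaps in simple rule-based stand-ins so it can run on its own; `Principle`, `CONSTITUTION` and `critique_and_revise` are hypothetical names, and the later training stages are not shown.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Principle:
    name: str
    violates: Callable[[str], bool]  # critique: does the draft break this principle?
    revise: Callable[[str], str]     # revision: rewrite the draft so it complies


# Hypothetical rule-based stand-ins for model-generated critiques and revisions.
CONSTITUTION = [
    Principle(
        name="Do not reveal personal contact details",
        violates=lambda text: "@" in text,
        revise=lambda text: re.sub(r"\S*@\S*", "[redacted]", text),
    ),
    Principle(
        name="Do not insult the user",
        violates=lambda text: re.search(r"\bstupid\b", text, re.IGNORECASE) is not None,
        revise=lambda text: re.sub(r"\bstupid\b", "fair", text, flags=re.IGNORECASE),
    ),
]


def critique_and_revise(draft, constitution, max_rounds=3):
    """Check the draft against every principle, revise on each violation,
    and repeat until nothing is violated or max_rounds is reached."""
    applied = []
    for _ in range(max_rounds):
        violated = [p for p in constitution if p.violates(draft)]
        if not violated:
            break
        for principle in violated:
            draft = principle.revise(draft)
            applied.append(principle.name)
    return draft, applied


final, applied = critique_and_revise(
    "That is a stupid question. Email bob@example.com for help.", CONSTITUTION
)
print(final)    # That is a fair question. Email [redacted] for help.
print(applied)  # ['Do not reveal personal contact details', 'Do not insult the user']
```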

Additional approaches include:

Red teaming, verification, anomaly detection, and interpretability: all aimed at detecting and removing emergent goals early, ideally within the training environment, and at revealing any attempts by the AI to deceive its supervisors.

Reward Modeling: A method in which an AI learns to predict the rewards humans would assign, based on their feedback, and then optimizes against those predictions, with the aim of aligning it with human values and goals through iterative learning.

Iterated Amplification: Involves breaking down complex tasks into simpler subtasks that weaker AI models can handle, progressively building up the system’s capability while maintaining control and alignment.

Cooperative Inverse Reinforcement Learning: The AI deduces human preferences by observing human actions and outcomes. It then cooperates with humans to achieve these inferred goals, so that its actions align with human values.

Adversarial Alignment: Involves training AI using adversarial techniques where models are pitted against each other to identify and correct misalignments, enhancing robustness and alignment through competitive dynamics.

Interactive Learning Methods: This approach uses continuous interaction between humans and AI systems, where the AI adapts and refines its behavior based on real-time feedback to stay aligned with human expectations.

Reinforcement Learning from Human Feedback (RLHF): An AI is trained to perform tasks according to human preferences gathered through direct feedback, typically improving task performance and alignment over time.

Each of these methods contributes to the broader goal of AI alignment: helping AI systems perform tasks in a manner that is both effective and ethically responsible, maximizing security and human safety while optimizing the beneficial impacts of the AI system.
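Reward Modeling and RLHF, listed above, share a common core: turning human comparisons into a learned reward signal. The sketch below fits a linear reward with the Bradley-Terry pairwise-preference model, a standard choice for this step, assuming each response has already been reduced to two invented features (helpfulness and toxicity). A real reward model is a neural network scoring raw text, and the subsequent reinforcement learning stage that optimizes a policy against the learned reward is not shown. The data and hyperparameters are hypothetical.

```python
import math

# Hypothetical labelled data: each response is reduced to two invented
# features, (helpfulness, toxicity); each entry is (preferred, rejected)
# as chosen by a human labeller comparing two responses to the same prompt.
PREFERENCES = [
    ((0.9, 0.1), (0.4, 0.2)),
    ((0.7, 0.0), (0.8, 0.9)),
    ((0.6, 0.1), (0.2, 0.1)),
    ((0.5, 0.0), (0.9, 0.7)),
]


def reward(w, x):
    """Linear reward model: r(x) = w . x"""
    return sum(wi * xi for wi, xi in zip(w, x))


def train_reward_model(pairs, lr=0.5, epochs=500):
    """Fit reward weights under the Bradley-Terry preference model,
    P(preferred beats rejected) = sigmoid(r(preferred) - r(rejected)),
    by full-batch gradient descent on the mean negative log-likelihood."""
    w = [0.0] * len(pairs[0][0])
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for preferred, rejected in pairs:
            margin = reward(w, preferred) - reward(w, rejected)
            p = 1.0 / (1.0 + math.exp(-margin))  # model's P(labeller's choice)
            for i in range(len(w)):
                # d(-log p)/dw_i = -(1 - p) * (preferred_i - rejected_i)
                grad[i] -= (1.0 - p) * (preferred[i] - rejected[i])
        w = [wi - lr * gi / len(pairs) for wi, gi in zip(w, grad)]
    return w


weights = train_reward_model(PREFERENCES)
print(weights)  # helpfulness weight comes out positive, toxicity weight negative
# The learned reward can now rank responses the labellers never saw:
print(reward(weights, (0.8, 0.1)) > reward(weights, (0.8, 0.6)))  # True
```

In RLHF this learned reward stands in for the human during training, which is also its weak point: whatever the labellers’ comparisons failed to capture, the reward model cannot capture either.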

Conclusion

The varied terminologies surrounding AI (“safe,” “secure,” “responsible,” or “trustworthy”) all emphasize the need for dedicated measures to safeguard against both internal and external threats. But regardless of the descriptors we may use, the essence of effectively managing AI risks lies in a holistic approach: layering defense mechanisms around AI systems and ensuring that their intrinsic goals and behaviors are aligned with human values and ethics throughout their lifecycle. This dual focus, integrating robust security protocols with rigorous alignment efforts, forms the backbone of our strategy to manage AI risks successfully, making AI systems that are not only advanced but also aligned and secure for human benefit.

As AI continues to evolve and integrate deeper into societal frameworks, the strategies for its governance, alignment, and security must also advance, ensuring that AI enhances human capabilities without undermining human values. This requires a vigilant, adaptive approach that is responsive to new challenges and opportunities, aiming for an AI future that is as secure as it is progressive.

For 30+ years, I've been committed to protecting people, businesses, and the environment from the physical harm caused by cyber-kinetic threats, blending cybersecurity strategies and resilience and safety measures. Lately, my worries have grown due to the rapid, complex advancements in Artificial Intelligence (AI). Having observed AI's progression for two decades and penned a book on its future, I see it as a unique and escalating threat, especially when applied to military systems, disinformation, or integrated into critical infrastructure like 5G networks or smart grids. More about me, and about Defence.AI.