Securing Data Labeling Through Differential Privacy

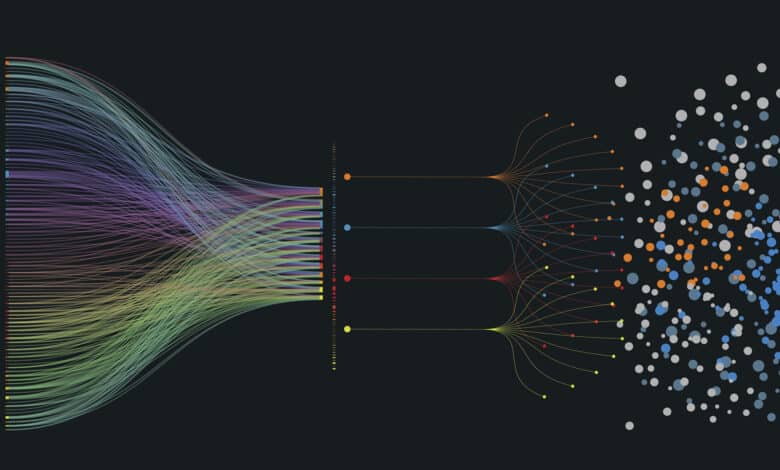

Data labeling plays a critical role in machine learning, particularly in supervised learning. While this process is instrumental in transforming raw data into a structured format that algorithms can learn from, it also necessitates the handling of potentially sensitive or personal information. This is where Differential Privacy comes in. This mathematical framework acts as a safeguard by introducing ‘random noise’ into the data or into the results computed from it, making it statistically difficult to reverse-engineer sensitive details about any one individual. Unlike more traditional methods of data protection that rely on altering the data before it is used, Differential Privacy limits what can be learned about any individual even during real-world analytical queries or complex machine-learning operations. This balance between data utility and privacy makes it an increasingly popular choice for organizations looking to optimize both.

The Importance of Data Labeling

Data labeling is a linchpin in the machine learning ecosystem, particularly for supervised and semi-supervised learning models. Beyond simple annotation, data labeling is an intricate process often entailing various types of annotations ranging from semantic segmentation in computer vision tasks to sentiment analysis in natural language processing. This granularity ensures that models can identify patterns, make decisions, or provide recommendations with high precision.

However, the technical complexities are numerous. Labeling tasks require data governance and quality assurance steps, such as outlier detection and data reconciliation, to ensure the model receives ‘clean’ and accurate information. Also, with the emergence of more advanced algorithms, the requirements for labeled data are becoming more stringent. New types of learning, such as few-shot learning and transfer learning, necessitate meticulously labeled data to produce effective generalized models.

Why Data Privacy Matters in Labeling

Data privacy takes on a heightened sense of urgency in the data labeling sphere due to the very nature of the data being processed. Let’s consider medical records, for example. When labeling MRI scans or patient histories, the data isn’t just sensitive; it’s protected under laws like HIPAA in the United States. Mismanagement of such data could lead not only to privacy infringement but also to legal repercussions.

The problem is amplified when you consider that labeled data often possesses a structure and context that makes it more useful and, therefore, more sensitive than its unlabeled counterpart. In cybersecurity terms, this “enriched” data is a high-value target for cybercriminals, inviting illicit activities ranging from data theft to ransomware attacks. Moreover, with the advent of distributed data labeling processes, where the labeling workload is shared across different nodes (possibly in different geographical locations), the risk vectors multiply. Each access point becomes a potential vulnerability, thus elevating the need for robust data privacy mechanisms.

Protecting labeled data through sophisticated privacy measures is critical for both the integrity of machine learning models and protection of sensitive information.

Differential Privacy: Bridging Utility and Privacy

Differential Privacy is a privacy paradigm that aims to reconcile the conflicting needs of data utility and individual privacy. Rooted in the mathematical theories of privacy and cryptography, Differential Privacy offers quantifiable privacy guarantees and has garnered substantial attention for its capability to provide statistical insights from data without compromising the privacy of individual entries. This robust mathematical framework incorporates Laplace noise or Gaussian noise algorithms to achieve this delicate balance.
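The quantifiable guarantee mentioned above is commonly stated as a single inequality. The notation here is introduced for illustration and is not taken from the article: M is the randomized mechanism, D and D' are any two datasets that differ in one record, S is any set of possible outputs, and ε and δ are the privacy parameters.

```latex
\Pr[\,M(D) \in S\,] \;\le\; e^{\varepsilon}\,\Pr[\,M(D') \in S\,] + \delta
```

With δ = 0 this reduces to pure ε-differential privacy. The smaller ε is, the closer the two output distributions must be, and the less any single record can influence what an observer sees.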

How it Works

At its technical foundation, Differential Privacy is achieved through a noise-adding mechanism. This usually involves implementing a randomized function that employs Laplace or Gaussian noise distribution techniques to alter the query responses on the data. Two critical parameters govern this process: ε (epsilon) and δ (delta). Epsilon represents the privacy loss in the system, and a lower epsilon value provides higher privacy guarantees. On the other hand, delta accounts for the statistical deviation, offering a probability guarantee that the privacy loss will be limited to epsilon with a probability of 1 − δ. The process aims to uphold “indistinguishability,” meaning the inclusion or exclusion of any data point should not significantly affect the outcome of any query, thereby preserving individual privacy.
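As a minimal sketch of the noise-adding step, assume the query is a simple count of how many items carry a given label. Adding or removing one record changes such a count by at most 1, so Laplace noise with scale 1/ε is enough for ε-differential privacy. The function names and the example labels below are hypothetical, and the sketch uses only the Python standard library.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution."""
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace distribution centred at 0 with that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_label_count(labels, target, epsilon, rng=None):
    """Return an epsilon-DP estimate of how many labels equal `target`."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng if rng is not None else random.Random()
    true_count = sum(1 for label in labels if label == target)
    # Assumption: neighbouring datasets differ by one record, so the
    # sensitivity of a counting query is 1.
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical labeled dataset: 30 "tumor" scans and 70 "healthy" scans.
    labels = ["tumor"] * 30 + ["healthy"] * 70
    for eps in (0.1, 1.0, 10.0):
        print(eps, round(noisy_label_count(labels, "tumor", eps), 2))
```

Running the example a few times should show the released count scattering widely at ε = 0.1 and staying close to 30 at ε = 10. This is an illustration only; floating-point noise sampling has known pitfalls, so a production system would normally rely on a vetted differential privacy library.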

Pros and Cons

Pros:

- Robust Mathematical Guarantee: Differential Privacy’s epsilon-delta parameters provide a rigorous, quantifiable bound on privacy loss and on the probability that this bound is exceeded. This isn’t an abstract promise but a measurable quantity.

- Optimized Data Utility: The noise-adding mechanisms are calibrated to the sensitivity of the query and the chosen privacy parameters, so that no more noise is introduced than the guarantee requires, thereby maintaining as much data utility as possible.

- Domain-Agnostic Versatility: One of the strongest attributes of Differential Privacy is its adaptability to various data types, from numerical data in healthcare to textual data in social sciences.
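To illustrate the versatility point for categorical labels, where adding numeric noise does not apply directly, one commonly used alternative is k-ary randomized response: each label is kept with probability e^ε / (e^ε + k − 1) and otherwise replaced by one of the other k − 1 classes chosen uniformly. This is a sketch under that assumption, not a method described in the article; the function name and the class names are hypothetical.

```python
import math
import random


def randomized_response(label, classes, epsilon, rng=None):
    """Perturb one categorical label so the output satisfies epsilon-DP."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if label not in classes or len(classes) < 2:
        raise ValueError("label must be one of at least two classes")
    rng = rng if rng is not None else random.Random()
    k = len(classes)
    # Probability of reporting the true label; every other class is
    # reported with probability (1 - p_keep) / (k - 1), so the ratio
    # between the two cases is exactly e^epsilon.
    p_keep = math.exp(epsilon) / (math.exp(epsilon) + k - 1)
    if rng.random() < p_keep:
        return label
    others = [c for c in classes if c != label]
    return rng.choice(others)


if __name__ == "__main__":
    classes = ["positive", "neutral", "negative"]  # hypothetical sentiment labels
    print([randomized_response("positive", classes, 1.0) for _ in range(10)])
```

Because each label is randomized independently, this variant can be applied by the annotator or at the labeling node before the label ever leaves it, at the cost of noisier training labels for small ε.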

Cons:

- Computational Complexity: The intricate mathematics and computational requirements for implementing Differential Privacy can deter small to mid-size organizations that may not have the technical expertise or computational resources.

- Data Distortion Risk: While the goal is to minimize distortion, the noise addition can sometimes compromise the data’s utility, especially when privacy parameters are not finely tuned.

- Parameter Sensitivity: Incorrectly set epsilon and delta values can jeopardize both data utility and privacy, making parameter selection a critical task that requires expertise.
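The distortion and parameter-sensitivity points can be made concrete by looking at how the noise scale depends on the parameters. The sketch below assumes a query with sensitivity 1 and uses two standard calibrations: the Laplace scale b = Δ/ε, whose expected absolute error equals b, and the classical Gaussian calibration σ = sqrt(2 ln(1.25/δ)) · Δ/ε, which is only valid for 0 < ε < 1. The function names are hypothetical.

```python
import math


def laplace_scale(sensitivity, epsilon):
    """Scale b of Laplace noise for epsilon-DP; expected |noise| equals b."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return sensitivity / epsilon


def gaussian_sigma(sensitivity, epsilon, delta):
    """Standard deviation for the classical (epsilon, delta) Gaussian mechanism."""
    # The classical analysis behind this formula assumes 0 < epsilon < 1.
    if not 0 < epsilon < 1:
        raise ValueError("this calibration assumes 0 < epsilon < 1")
    if not 0 < delta < 1:
        raise ValueError("delta must be in (0, 1)")
    return math.sqrt(2.0 * math.log(1.25 / delta)) * sensitivity / epsilon


if __name__ == "__main__":
    for eps in (0.05, 0.1, 0.5, 0.9):
        b = laplace_scale(1.0, eps)
        sigma = gaussian_sigma(1.0, eps, 1e-5)
        print(f"epsilon={eps}: Laplace scale={b:.2f}, Gaussian sigma={sigma:.2f}")
```

Halving ε doubles the noise scale in both cases, which is why a value chosen too small can swamp a count over a few hundred labeled items, while a value chosen too large leaves little meaningful protection.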

What Happens if It Fails?

The failure of Differential Privacy to adequately preserve individual privacy could have dire consequences. These range from the unintended disclosure of sensitive information to the erosion of public trust in data-dependent systems and technologies. Legal repercussions could include hefty fines and sanctions, particularly under regulations like GDPR, CCPA, or HIPAA.

Good Practices

For successful implementation of Differential Privacy, organizations must consider several best practices:

- Expert Consultation: A multi-disciplinary team, including data privacy experts, legal advisors, and domain-specific experts, should be consulted to correctly implement the privacy parameters.

- Parameter Tuning: The epsilon and delta values should be finely tuned after conducting numerous iterations and cross-validations, to harmonize data utility and privacy.

- Regular Audits and Monitoring: A continuous review process involving regular audits can help ensure that the privacy measures remain effective and adapt to new types of potential security threats.
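One way to support the tuning and audit practices above is to keep an explicit record of how much privacy budget each query consumes. The sketch below assumes basic sequential composition, under which the ε and δ values of successive queries on the same data simply add up; tighter accounting methods exist but are not shown. The class name `PrivacyBudget` and its methods are hypothetical.

```python
class PrivacyBudget:
    """Track cumulative privacy loss under basic sequential composition."""

    def __init__(self, total_epsilon, total_delta=0.0):
        if total_epsilon <= 0 or total_delta < 0:
            raise ValueError("total_epsilon must be > 0 and total_delta >= 0")
        self.total_epsilon = total_epsilon
        self.total_delta = total_delta
        self.spent_epsilon = 0.0
        self.spent_delta = 0.0
        self.log = []  # audit trail of (note, epsilon, delta) entries

    def spend(self, epsilon, delta=0.0, note=""):
        """Record one query, refusing it if the budget would be exceeded."""
        if epsilon <= 0 or delta < 0:
            raise ValueError("epsilon must be > 0 and delta >= 0")
        tol = 1e-12  # tolerance for floating-point accumulation
        if (self.spent_epsilon + epsilon > self.total_epsilon + tol
                or self.spent_delta + delta > self.total_delta + tol):
            raise RuntimeError("privacy budget exceeded; query refused")
        self.spent_epsilon += epsilon
        self.spent_delta += delta
        self.log.append((note, epsilon, delta))

    @property
    def remaining_epsilon(self):
        return self.total_epsilon - self.spent_epsilon


if __name__ == "__main__":
    budget = PrivacyBudget(total_epsilon=1.0)
    budget.spend(0.4, note="label histogram")
    budget.spend(0.4, note="per-annotator counts")
    print("remaining epsilon:", round(budget.remaining_epsilon, 3))
    print("audit log:", budget.log)
```

The log gives auditors a simple trail of what was released and at what cost, and the hard refusal prevents repeated tuning runs from quietly eroding the guarantee.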

Recent Research

The application and impact of Differential Privacy in the realm of data labeling have been examined in a series of academic papers. For instance, one study [1] showcases the synergy between Differential Privacy and Generative Adversarial Networks, emphasizing the potential for enhanced privacy and label quality. Another study [2] extends the conversation to large-scale implementations, offering a practical framework for organizations. The trade-offs between data utility and privacy are quantitatively analyzed in [3], providing useful insights for parameter tuning. In a different setting, a study [4] explores the challenges and benefits of implementing Differential Privacy in crowdsourced data labeling. Another study [5] provides metrics to evaluate the impact of Differential Privacy on the machine learning models themselves. Finally, recent research [6] explores how Differential Privacy can be applied in federated learning systems, opening up new avenues for its use in data labeling in decentralized setups. Collectively, these academic works not only support the practical application of Differential Privacy in data labeling but also broaden the horizons for its future use.

Conclusion

As machine learning and AI continue to permeate every sector, the issues surrounding data labeling and privacy are more crucial than ever. Differential Privacy is a mathematically grounded, versatile tool for bridging the gap between data utility and data privacy. Its role in preserving privacy while maintaining the data’s utility makes it increasingly popular in academic research and real-world applications. However, it’s not a silver bullet and requires judicious implementation and regular audits to be effective. In the world of data labeling, the focus on privacy needs to be as sharp as the focus on quality. Balancing the two is a challenging but necessary endeavor to define the future of secure, effective machine learning.

By adhering to rigorous data privacy measures like Differential Privacy, organizations can protect individual privacy and adhere to legal frameworks while continuing to innovate and advance the field of machine learning.

References

[1] Kossen, T., Hirzel, M. A., Madai, V. I., Boenisch, F., Hennemuth, A., Hildebrand, K., … & Frey, D. (2022). Toward sharing brain images: Differentially private TOF-MRA images with segmentation labels using generative adversarial networks. Frontiers in Artificial Intelligence, 5, 85.

[2] Choudhury, O., Gkoulalas-Divanis, A., Salonidis, T., Sylla, I., Park, Y., Hsu, G., & Das, A. (2019). Differential privacy-enabled federated learning for sensitive health data. arXiv preprint arXiv:1910.02578.

[3] Wunderlich, D., Bernau, D., Aldà, F., Parra-Arnau, J., & Strufe, T. (2022). On the Privacy–Utility Trade-Off in Differentially Private Hierarchical Text Classification. Applied Sciences, 12(21), 11177.

[4] Wang, Y., Gu, M., Ma, J., & Jin, Q. (2019). DNN-DP: Differential privacy enabled deep neural network learning framework for sensitive crowdsourcing data. IEEE Transactions on Computational Social Systems, 7(1), 215-224.

[5] Bagdasaryan, E., Poursaeed, O., & Shmatikov, V. (2019). Differential privacy has disparate impact on model accuracy. Advances in Neural Information Processing Systems, 32.

[6] Yu, S., & Cui, L. (2022). Differential Privacy in Federated Learning. In Security and Privacy in Federated Learning (pp. 77-88). Singapore: Springer Nature Singapore.

For 30+ years, I've been committed to protecting people, businesses, and the environment from the physical harm caused by cyber-kinetic threats, blending cybersecurity strategies and resilience and safety measures. Lately, my worries have grown due to the rapid, complex advancements in Artificial Intelligence (AI). Having observed AI's progression for two decades and penned a book on its future, I see it as a unique and escalating threat, especially when applied to military systems, disinformation, or integrated into critical infrastructure like 5G networks or smart grids.

Luka Ivezic

Luka Ivezic is the Lead Cybersecurity Consultant for Europe at the Information Security Forum (ISF), a leading global, independent, and not-for-profit organisation dedicated to cybersecurity and risk management. Before joining ISF, Luka served as a cybersecurity consultant and manager at PwC and Deloitte. His journey in the field began as an independent researcher focused on cyber and geopolitical implications of emerging technologies such as AI, IoT, and 5G. He co-authored the book "The Future of Leadership in the Age of AI" with Marin. Luka holds a Master's degree from King's College London's Department of War Studies, where he specialized in the disinformation risks posed by AI.