NIST Launches Trustworthy and Responsible Artificial Intelligence Resource Center (AIRC)

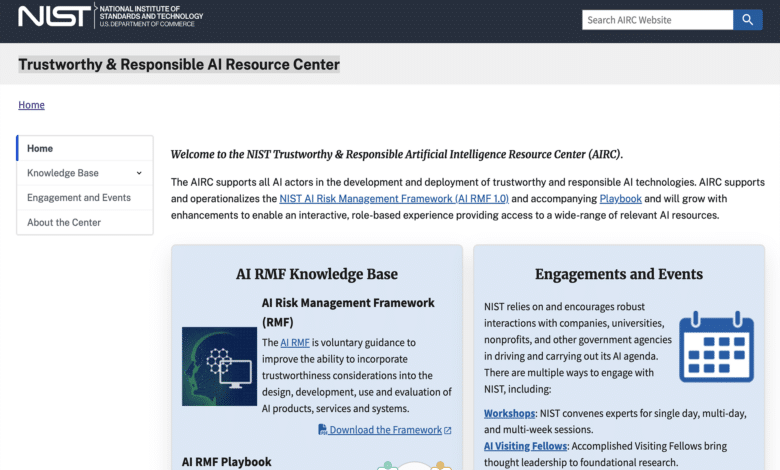

On March 30, 2023, the National Institute of Standards and Technology (NIST) launched its Trustworthy and Responsible Artificial Intelligence Resource Center (AIRC). This online hub collects NIST’s content on artificial intelligence (AI), including a dedicated AI Playbook designed to guide companies in using AI ethically and responsibly. The AIRC’s mission is to help all AI stakeholders develop and deploy AI technologies that are trustworthy and responsible, that align with international standards, and that reflect diverse stakeholder perspectives.

The most prominent of the AIRC’s resources is the AI Risk Management Framework (AI RMF) 1.0. The framework sets out principles and best practices for managing the risks of AI systems throughout their lifecycle, and it gives companies a structured approach built on four core functions: govern, map, measure, and manage. Alongside the AI RMF, the AIRC provides a playbook that supports putting the framework into practice. The center also features a roadmap of upcoming NIST initiatives, a glossary of AI-related terms, and a section on events and opportunities that align with NIST’s AI objectives. NIST expects the AIRC’s offerings to expand as AI continues to evolve.

The AIRC roadmap identifies key activities for advancing the AI RMF, which NIST will carry out in collaboration with public and private sector organizations. The list is not fixed: it will change as AI technologies mature and as experience with them grows.

For more than 30 years, I've been committed to protecting people, businesses, and the environment from the physical harm caused by cyber-kinetic threats, blending cybersecurity strategies with resilience and safety measures. Lately, my worries have grown because of the rapid, complex advances in Artificial Intelligence (AI). Having observed AI's progression for two decades and written a book on its future, I see it as a unique and escalating threat, especially when it is applied to military systems or disinformation, or integrated into critical infrastructure such as 5G networks or smart grids.